Bots, bucks, and the illusion of momentum

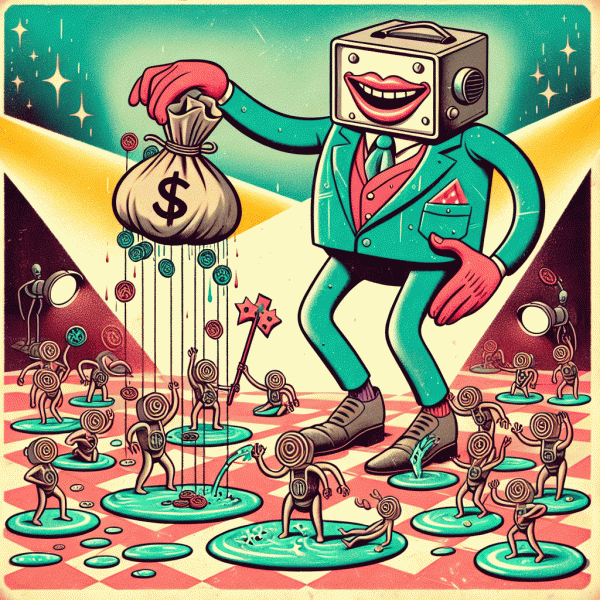

The social proof black market is a carnival of glitzy numbers: sudden spikes in likes, perfect like-to-follower ratios, a slew of anonymous profiles and comments that read like canned templates. That shimmer seduces decision-makers into believing momentum is real. Behind the curtain is a mix of bot farms, click farms and microtransactions that convert cash into perceived popularity. The result is a superficial leaderboard that can mislead product teams, sales teams and advertisers into trusting vanity over value. That illusion is not harmless. It warps algorithmic feedback loops, drains budget and can poison brand trust when inorganic activity is exposed. The good news is that a little curiosity and a few practical checks can turn suspicion into clarity.

Start with the simplest audit. Look at the timeline of engagement rather than a single snapshot: genuine interest builds in waves across posts and formats, not in a single overnight cliff. Check comment quality and diversity; genuine comments reference specifics, use natural language and come from accounts with varied histories. Examine the age distribution of follower accounts and the speed of follower growth; a legitimate account rarely adds thousands of followers in a day without a corresponding jump in reach. Cross-reference top engagers with their own feeds to see whether they are real participants or recycled shells. Watch for geographic mismatches between the audience and campaign targeting. If vendor work is involved, ask for provenance and invoices for the impressions. None of these checks requires specialized tools, only attention and a basic analytics routine.
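Growth velocity is the easiest of these checks to automate. The sketch below is a minimal Python example, not any platform's method. It flags days whose follower gain sits far above the trailing baseline. The 7-day window and the z-score threshold of 3 are illustrative assumptions, and the daily counts would come from whatever analytics export you already use.

```python
from statistics import mean, pstdev


def flag_growth_spikes(daily_new_followers, window=7, z_threshold=3.0):
    """Return indices of days whose follower gain is far above the trailing baseline.

    daily_new_followers: follower gains per day, oldest first.
    window and z_threshold are assumed defaults; tune them per account.
    """
    flagged = []
    for i in range(window, len(daily_new_followers)):
        baseline = daily_new_followers[i - window:i]
        mu = mean(baseline)
        # Floor the spread at 1 so a perfectly flat baseline does not divide by zero
        sigma = max(pstdev(baseline), 1.0)
        z = (daily_new_followers[i] - mu) / sigma
        if z > z_threshold:
            flagged.append(i)
    return flagged


gains = [40, 52, 38, 45, 50, 47, 43, 41, 5200, 48]
print(flag_growth_spikes(gains))  # → [8]
```

A flagged day does not prove fraud on its own. It tells you which date to check against reach and referral data.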

When paid engagement is detected, take a measured approach. Pause paid amplification on suspicious assets, isolate affected cohorts in analytics and annotate them so future reporting does not inherit false signals. Clean your data by excluding inorganic spikes from conversion and LTV models. If a partner sold you fake interactions, escalate the issue to the platform, document the evidence and request remediation. Then reallocate budget to tactics that create durable attention: better creative testing, genuine micro-influencers with verifiable audiences, community-driven formats and conversion experiments that rely on owned channels. Over time, quality investments compound, while fake momentum collapses once the spending stops.
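Excluding inorganic spikes can be as simple as carrying an audit flag on each day of data and filtering on it before computing rates. The sketch below is minimal and assumes a hypothetical `DailyStats` record with toy numbers. In practice your audit would set the flag, and the same filter would apply to LTV inputs.

```python
from dataclasses import dataclass


@dataclass
class DailyStats:
    day: str
    sessions: int
    conversions: int
    flagged_inorganic: bool = False  # set during the audit for days with known bought engagement


def conversion_rate(rows, exclude_flagged=True):
    """Conversions per session, optionally skipping days flagged as inorganic."""
    kept = [r for r in rows if not (exclude_flagged and r.flagged_inorganic)]
    sessions = sum(r.sessions for r in kept)
    conversions = sum(r.conversions for r in kept)
    return conversions / sessions if sessions else 0.0


rows = [
    DailyStats("day-1", 1000, 30),
    DailyStats("day-2", 1100, 31),
    DailyStats("day-3", 9000, 36, flagged_inorganic=True),  # bot-driven traffic spike
    DailyStats("day-4", 950, 29),
]

print(round(conversion_rate(rows, exclude_flagged=False), 4))  # → 0.0105
print(round(conversion_rate(rows), 4))                          # → 0.0295
```

In this toy data, the bot spike drags the blended rate down to roughly a third of the organic rate. That is the kind of distortion that leaks into forecasts when flagged days stay in the model.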

Finally, treat this as governance, not a one-off audit. Build simple guardrails into campaign playbooks: baseline engagement tests, approval steps for list buys, periodic third-party audits and a culture that rewards depth of relationship over surface metrics. Use experiments to measure signal quality so teams learn to value metrics that predict business outcomes rather than those that only look pretty on a dashboard. Above all, keep a skeptical eye and a practical toolkit. Numbers can be seductive, but only signals tied to real user behavior will sustain growth. Focus on signal, not shimmer.

How fake signals hack real algorithms

Algorithms are glorified pattern detectors, and fake signals are mirages that look exactly like positive patterns until you get close. Bought likes, bot comments and engagement pods create sudden surges in the metrics platforms care about most: early reaction rate, comment velocity, view completion, and share frequency. Recommendation engines treat that early surge as a thumbs-up, so a piece of content can appear to go from invisible to viral in minutes. The problem is that this momentum is counterfeit. Underneath the shiny spike there is often no real interest, no repeat visits and no downstream conversions, and those are the signals that actually sustain long-term growth.

So how do fake signals work their magic? They game the heuristics platforms use to predict future engagement. Signals are not just raw counts; they are patterns over time, cohorts and content types. Fake engagement introduces anomalies:

- unusually high like-to-follower ratios
- comment streams filled with single words or emojis
- engagement that arrives in tight, synchronized bursts from newly created or inactive profiles
- view counts that climb without corresponding increases in watch time or clicks

An inflated signal can push content into larger test pools. If the platform then sees poor retention or weak conversion, it will eventually downgrade the item. That lag between boost and downgrade can cost real creators their prime window of discovery.
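Two of these anomalies, low-effort comments and copy-pasted comments, are easy to quantify. The sketch below is a rough heuristic of our own, not how any platform scores comments. It treats a comment with fewer than three words as low effort, and emoji-only comments count as zero words. It also counts comments whose normalized text appears more than once. The word threshold is an assumption to tune against accounts you trust.

```python
import re
from collections import Counter

# ASCII-only word pattern (an assumption); non-English comments would need a broader one
WORD_RE = re.compile(r"[A-Za-z0-9']+")


def comment_quality(comments, min_words=3):
    """Return (low_effort_share, duplicate_share) for a list of comment strings."""
    if not comments:
        return 0.0, 0.0
    # Low effort: fewer than min_words words; emoji-only comments have zero
    low_effort = sum(1 for c in comments if len(WORD_RE.findall(c)) < min_words)
    # Normalize case and punctuation; fall back to the raw text for emoji-only comments
    normalized = [" ".join(WORD_RE.findall(c.lower())) or c.strip() for c in comments]
    counts = Counter(normalized)
    duplicates = sum(n for n in counts.values() if n > 1)
    return low_effort / len(comments), duplicates / len(comments)


sample = [
    "Great post!",
    "🔥🔥🔥",
    "great post",
    "The section on retention cohorts matched what we saw in Q2.",
    "🔥🔥🔥",
    "Nice",
]
low, dup = comment_quality(sample)
print(f"low effort: {low:.0%}, duplicated: {dup:.0%}")  # → low effort: 83%, duplicated: 67%
```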

The consequences are practical and painful. Teams optimize toward the wrong creative, advertiser budgets are wasted, A/B experiments become noisy, and trust with both users and platforms erodes. Platforms know this and keep updating their detection, which makes bought engagement a brittle, short-term cheat that can trigger penalties, reduced distribution or outright account action. The healthier path is to treat engagement as a compound metric, not a vanity moment: prioritize retention, clicks that lead to action, saves and meaningful comments over raw spikes. Measuring those downstream outcomes exposes the difference between fake applause and real interest.

Here is a compact playbook to stay ahead:

- Audit: run regular follower quality checks and flag accounts with no profile history or generic usernames.

- Test: run control experiments in which a subset of posts gets organic distribution only, then compare retention and conversion across cohorts.

- Clean: remove suspicious followers and stop using engagement vendors immediately.

- Invest: shift budget from purchased signals to creative testing and community activation that generates repeat visitors.

- Monitor: set alerts for abnormal spikes in engagement velocity, and track watch time and conversion alongside raw likes.

Think like a detective, not a desperate advertiser. Dirty tricks can buy a headline, but algorithms ultimately reward the work you do to earn attention over time.
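For the Test step, a standard two-proportion z-test is one simple way to check whether retention differs between the organic-only cohort and the promoted cohort by more than chance. The sketch below is minimal and uses only the standard library. The cohort sizes and retention counts are made up. "Retained" means whatever definition your team agrees on, for example a return visit within seven days.

```python
from math import erf, sqrt


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Two-sided two-proportion z-test. Returns (difference, z, p_value)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No successes (or all successes) in both cohorts: no evidence of a difference
        return p_a - p_b, 0.0, 1.0
    z = (p_a - p_b) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return p_a - p_b, z, p_value


# Organic-only cohort: 180 of 1,000 users retained; promoted cohort: 210 of 3,000
diff, z, p = two_proportion_z(180, 1000, 210, 3000)
print(f"retention gap {diff:.1%}, z = {z:.1f}, p = {p:.2g}")
```

With these toy numbers, the gap is far outside chance. With small cohorts, the p-value tells you whether you need more data before moving budget.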

When to rent attention and when to build it

Deciding whether to rent attention for a campaign or build it over time is like choosing between a turbocharger and a slow-burn wood stove. Both heat things up, but one gives an immediate jolt while the other becomes the backbone of your cozy future. Rent attention when you need scale, predictability, or a fast experiment. Build attention when you need trust, repeat visits, and a channel that pays dividends. Think of rented attention as a rented billboard on a busy highway and built attention as a favorite local coffee shop where regulars bring friends. Both have value; the trick is to match the tool to the job.

Make the decision with three simple signals. If time is short, budget is flexible, and conversion goals are measurable within campaign windows, renting is the logical choice. If your product needs education, community, or repeat purchase behavior, invest in building. If you cannot choose, split the difference: use rented attention to jumpstart reach and use that data to inform what you build. Here is a small checklist to speed the call:

- 🆓 Speed: Need immediate traffic or test results? Rent attention to shorten learning loops.

- 🚀 Scale: Want predictable volume for launches or offers? Paid channels give the control you need.

- 🐢 Durability: Prioritize building if lifetime value and community are the real goals.
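The decision rule in this section is simple enough to write down explicitly, which helps teams apply it consistently. The sketch below is minimal. The function name and boolean inputs are our own framing of the section's signals, not a formal model.

```python
def rent_or_build(*, time_short: bool, budget_flexible: bool,
                  measurable_in_window: bool, needs_trust_or_repeat: bool) -> str:
    """Map the section's signals to 'rent', 'build' or 'split'."""
    # Renting fits when time is short, budget is flexible and results show up within the campaign
    rent_fits = time_short and budget_flexible and measurable_in_window
    # Building fits when the product needs education, community or repeat purchases
    build_fits = needs_trust_or_repeat
    if rent_fits and not build_fits:
        return "rent"
    if build_fits and not rent_fits:
        return "build"
    # Both or neither: rent to jumpstart reach and use the data to decide what to build
    return "split"


print(rent_or_build(time_short=True, budget_flexible=True,
                    measurable_in_window=True, needs_trust_or_repeat=True))  # → split
```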

When renting attention, set clear success metrics and an exit plan. Track cost per acquisition, user quality and how many rented leads convert into repeat customers. If conversion quality is low, do not double down blindly; use that spend to learn which creatives or audience segments actually stick. When you are ready to turn rented attention into owned attention, capture contact points, subscriptions or micro-conversions that feed your long-term funnel. If you want a pragmatic place to test micro-tasks or short gigs that can amplify reach while monetizing community actions, check out get paid for tasks for ideas and inspiration.
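Tracking these numbers side by side shows quickly when cheap acquisitions are not turning into repeat customers or owned contacts. The sketch below is minimal and assumes you can attribute first conversions, later repeat purchases and captured sign-ups back to the rented campaign. The `RentedCampaign` type and the figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RentedCampaign:
    spend: float
    acquisitions: int      # first conversions attributed to the campaign
    repeat_customers: int  # acquired users who bought again within your follow-up window
    owned_signups: int     # email, SMS or community sign-ups captured from the campaign

    def cpa(self) -> float:
        return self.spend / self.acquisitions if self.acquisitions else float("inf")

    def repeat_rate(self) -> float:
        return self.repeat_customers / self.acquisitions if self.acquisitions else 0.0

    def cost_per_repeat_customer(self) -> float:
        return self.spend / self.repeat_customers if self.repeat_customers else float("inf")

    def cost_per_owned_contact(self) -> float:
        return self.spend / self.owned_signups if self.owned_signups else float("inf")


launch = RentedCampaign(spend=5000.0, acquisitions=250, repeat_customers=20, owned_signups=400)
print(launch.cpa(), launch.repeat_rate(), launch.cost_per_repeat_customer())  # → 20.0 0.08 250.0
print(launch.cost_per_owned_contact())  # → 12.5
```

A CPA of 20 looks healthy until each repeat customer turns out to cost 250. That second number is the one to weigh against the cost of building.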

Finally, treat this as a loop, not a ladder. Rent to learn, build to keep, and alternate as conditions change. Run one tidy experiment every month, measure what flows into your owned channels, and split your budget between both strategies until your metrics clarify the right balance. The best teams do not worship either option. They use rented attention to buy time and data, then pour those insights into building assets that earn attention for free tomorrow. That is how short sparks become steady fires.

Red flags that mean you are lighting budget on fire

If your paid engagement program feels like watching money evaporate, look for a handful of unmistakable warning signs:

- A flood of clicks with almost no conversions. This is the most obvious sign: a high CTR with near-zero downstream actions means the attention was cheap and useless.
- Abnormally high bounce rates, extremely short sessions, or traffic concentrated in odd geographies or device types that never buy. These are equally toxic.
- Suspicious spikes in referral sources, or dozens of conversions coming from a single IP range.
- Runaway frequency, where the same users see an ad ten times a day. Repeated exposure breeds fatigue, not conversions.
- Conversion costs that suddenly diverge from historical baselines without an organic cause. If you see this, suspect that budget is being burned on low-quality signals.
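The single-IP-range warning is easy to check from a conversion log. The sketch below is minimal and uses Python's standard `ipaddress` module. It groups conversion IPs into /24 blocks and reports the largest block's share. It assumes IPv4 addresses and a /24 grouping, and the sample addresses come from reserved documentation ranges. Carrier NAT and corporate networks can legitimately concentrate traffic, so treat a high share as a lead to investigate, not a verdict.

```python
import ipaddress
from collections import Counter


def top_subnet_share(conversion_ips, prefix=24):
    """Return (network, share) for the /prefix block that holds the most conversions.

    Assumes IPv4 addresses; IPv6 traffic would usually be grouped with a different prefix.
    """
    if not conversion_ips:
        return None, 0.0
    # strict=False accepts a host address and returns its enclosing network
    blocks = Counter(
        ipaddress.ip_network(f"{ip}/{prefix}", strict=False) for ip in conversion_ips
    )
    network, count = blocks.most_common(1)[0]
    return network, count / len(conversion_ips)


ips = ["203.0.113.5", "203.0.113.9", "203.0.113.77", "203.0.113.200",
       "198.51.100.4", "192.0.2.10"]
network, share = top_subnet_share(ips)
print(network, f"{share:.0%}")  # → 203.0.113.0/24 67%
```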

When you spot these signals, perform a rapid triage rather than a panicked pause. First, validate the data: confirm that tracking tags and events fire correctly, reconcile platform-reported conversions with analytics or server logs, and verify UTM consistency. Then segment performance by placement, creative, device, time of day, and geography to identify the worst culprits. If a placement or publisher shows excessive clicks but zero engagement, pause it and blacklist it for future buys. If a creative earns huge impressions but abysmal on-site behavior, swap it out and test a variation. Review conversion windows and attribution settings to make sure you are not counting view-through conversions as meaningful wins.
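Reconciling platform-reported conversions with your own server logs can start as a per-campaign comparison keyed on the UTM campaign value. The sketch below is minimal. The campaign names, counts and the 20% tolerance are hypothetical. Some gap is normal, because attribution windows and view-through rules differ between systems.

```python
def reconcile(platform_counts, server_counts, tolerance=0.2):
    """Return {campaign: relative_gap} where the platform over-reports by more than tolerance."""
    flagged = {}
    for campaign, reported in platform_counts.items():
        logged = server_counts.get(campaign, 0)
        if logged == 0:
            # Conversions the platform claims but your server never saw
            gap = float("inf") if reported > 0 else 0.0
        else:
            gap = (reported - logged) / logged
        if gap > tolerance:
            flagged[campaign] = gap
    return flagged


platform = {"spring_sale": 120, "retarget_a": 300, "lookalike_b": 45, "partner_x": 80}
server = {"spring_sale": 110, "retarget_a": 140, "lookalike_b": 44}
for campaign, gap in reconcile(platform, server).items():
    print(campaign, "no matching server conversions" if gap == float("inf") else f"+{gap:.0%}")
```

Only over-reporting is flagged here, since that is the direction that inflates apparent performance. Under-reporting usually points to a broken tag instead.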

After containment, apply targeted fixes that preserve momentum while cutting waste. Tighten targeting with negative keywords, audience exclusions, and placement exclusions. Introduce frequency caps and lower bids on underperforming devices or regions. Shift to value-based bidding if possible, so the platform optimizes toward quality actions rather than raw clicks. Invest a small portion of budget in creative and landing page tests, because a poor fit between creative and conversion path is often the root cause. Consider adding a basic click-fraud filter, or work with a fraud detection partner if bot traffic shows up. Critically, standardize on a single source of truth for conversions so optimization decisions do not chase noisy numbers.

None of these moves requires a full reboot of paid activity, but they do require discipline. Start with three quick wins: pause the worst-performing placements, fix or validate tracking, and run a 14-day controlled test of tightened targeting plus new creative. Build a simple dashboard that shows CTR against conversion rate and cost per conversion by placement, and schedule a weekly audit. Treat budget like a bonsai rather than a bonfire: trim aggressively where growth is impossible, water where the roots show promise, and measure like your next campaign depends on it, because it does.
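The per-placement view behind that dashboard is a small calculation over exported stats. The sketch below is minimal and uses a hypothetical `PlacementStats` record with made-up numbers. It computes CTR, conversion rate and cost per conversion for each placement. It flags placements with many clicks and zero conversions, using an assumed 100-click threshold, and sorts the worst cost per conversion to the top.

```python
from dataclasses import dataclass


@dataclass
class PlacementStats:
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float


def placement_report(rows, min_clicks=100):
    """Per-placement CTR, conversion rate and cost per conversion, worst cost first."""
    report = []
    for r in rows:
        report.append({
            "placement": r.name,
            "ctr": r.clicks / r.impressions if r.impressions else 0.0,
            "conv_rate": r.conversions / r.clicks if r.clicks else 0.0,
            "cost_per_conv": r.spend / r.conversions if r.conversions else float("inf"),
            # Red flag from this section: plenty of clicks but nothing downstream
            "suspicious": r.clicks >= min_clicks and r.conversions == 0,
        })
    return sorted(report, key=lambda row: row["cost_per_conv"], reverse=True)


rows = [
    PlacementStats("news_feed", 50_000, 600, 18, 900.0),
    PlacementStats("app_network_x", 40_000, 2_400, 0, 700.0),
    PlacementStats("search", 20_000, 500, 25, 1_000.0),
]
for row in placement_report(rows):
    print(row["placement"], f"CTR {row['ctr']:.1%}", f"cost/conv {row['cost_per_conv']:.0f}",
          "PAUSE?" if row["suspicious"] else "")
```

Note that the placement with the highest CTR is the one to pause. That is exactly the pattern a CTR-only dashboard would hide.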

A safer playbook for buying engagement without getting burned

Buying engagement without getting burned starts with a mindset shift: think like a cautious scientist, not a gambler. Before spending a dollar, write down the hypothesis you want to test, the minimum success signal that will confirm it and the maximum loss you will tolerate. Translate broad goals into micro-metrics that matter to the business rather than vanity numbers. For example, prefer a predictable lift in click-throughs, time on site or qualified leads over a fuzzy promise of thousands of impressions. Keep the experiment small, measurable and time-boxed so that every outcome teaches you something.
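Writing the hypothesis, success signal, loss limit and time box into a small structure makes the stop rules explicit before any money moves. The sketch below is minimal. The `EngagementExperiment` class, its field names and the decision labels are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EngagementExperiment:
    hypothesis: str
    metric: str          # a business micro-metric, e.g. "qualified_leads"
    min_success: float   # metric value that confirms the hypothesis
    max_loss: float      # most spend you are willing to lose
    max_days: int        # time box

    def decide(self, spend: float, metric_value: float, days_elapsed: int) -> str:
        """Return 'success', 'stop_loss', 'stop_time' or 'continue'."""
        if metric_value >= self.min_success:
            return "success"
        if spend >= self.max_loss:
            return "stop_loss"
        if days_elapsed >= self.max_days:
            return "stop_time"
        return "continue"


pilot = EngagementExperiment(
    hypothesis="Creator A's audience produces qualified leads at a sustainable cost",
    metric="qualified_leads",
    min_success=30,
    max_loss=2000.0,
    max_days=14,
)
print(pilot.decide(spend=800.0, metric_value=12, days_elapsed=5))  # → continue
```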

Vetting is where most marketers get saved or burned. Ask for raw proof, not polished decks: recent analytics exports, sample follower lists and unedited screenshots of campaign dashboards. Watch for common red flags: engagement that arrives in identical batches, comment threads with the same phrasing, followers with empty bios and no history, or sudden spikes that match nothing in the creator's content calendar. Use basic pattern recognition and simple tools to check whether likes and views track follower counts in plausible proportions. Strong red flags mean walk away; suspicious but not fatal signs mean test at low scale.
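The check on likes and views against follower counts can be expressed as a band test. The sketch below is minimal. Its 0.5% to 15% band for average likes per follower is a placeholder assumption, because typical rates vary widely by platform, niche and account size, so calibrate it against accounts you already trust. It also flags posts with fewer views than likes, which rarely happens organically.

```python
def engagement_rate_check(followers, avg_likes, avg_views=None, low=0.005, high=0.15):
    """Classify an account's average engagement as plausible or suspicious.

    low/high bound likes-per-follower; both defaults are placeholder assumptions.
    """
    if followers <= 0:
        raise ValueError("followers must be positive")
    if avg_views is not None and avg_views < avg_likes:
        return "views_below_likes"  # more likes than views rarely happens organically
    rate = avg_likes / followers
    if rate < low:
        return "too_low"   # big audience that never engages: possibly bought or inactive followers
    if rate > high:
        return "too_high"  # likes far out of proportion to audience: possibly bought likes
    return "plausible"


print(engagement_rate_check(followers=120_000, avg_likes=300))  # → too_low
```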

Run controlled pilots and tie payment to verification. Start with a tiny spend and always use trackable links and UTMs so you can measure downstream behavior. Structure deals with milestone payments and a modest holdback until an agreed set of KPIs is verified. If the goal is conversions, pay for conversions or qualified leads rather than raw clicks. If the goal is awareness, layer in viewability and time-on-content metrics. Require creator transparency about audience makeup, and agree on creative rights and usage up front. Keep a strict testing cadence: change only one variable at a time so you know what to scale and what to kill.
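Milestone payments with a holdback reduce to simple arithmetic, but writing the terms down helps avoid disputes later. The sketch below is minimal and works under assumed terms. A 15% holdback is released only once the agreed KPIs are verified, and the rest is split across milestones whose shares sum to 1. The function and its parameters are hypothetical.

```python
import math


def milestone_payout(total_fee, milestones, holdback_pct=0.15, kpis_verified=False):
    """Amount owed so far.

    milestones: list of (share, completed) pairs whose shares sum to 1.
    The holdback portion is paid only after the agreed KPIs are verified.
    """
    if not math.isclose(sum(share for share, _ in milestones), 1.0):
        raise ValueError("milestone shares must sum to 1")
    payable_now = total_fee * (1 - holdback_pct)
    earned = sum(payable_now * share for share, done in milestones if done)
    holdback = total_fee * holdback_pct if kpis_verified else 0.0
    return earned + holdback


deal = [(0.5, True), (0.5, False)]  # first milestone delivered, second pending
print(milestone_payout(10_000, deal))  # → 4250.0
```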

Monitoring and exit rules keep small fires from becoming infernos. Automate baseline alerts for odd traffic patterns, but pair automation with periodic human audits so nuance is not missed. Keep a living blacklist of vendors and channels that failed verification, and rotate partners so you do not become overexposed to a single risky source. Finally, invest in creative that encourages real interaction; better creative amplifies genuine engagement and makes fake activity easier to spot. If you want hands-on micro-tasks or a starting point for low-risk experiments, check online jobs for beginners for practical ways to get quick, verifiable labor that supports careful scaling.