Fake Love, Real Lift: How Algorithms Reward Noisy Signals

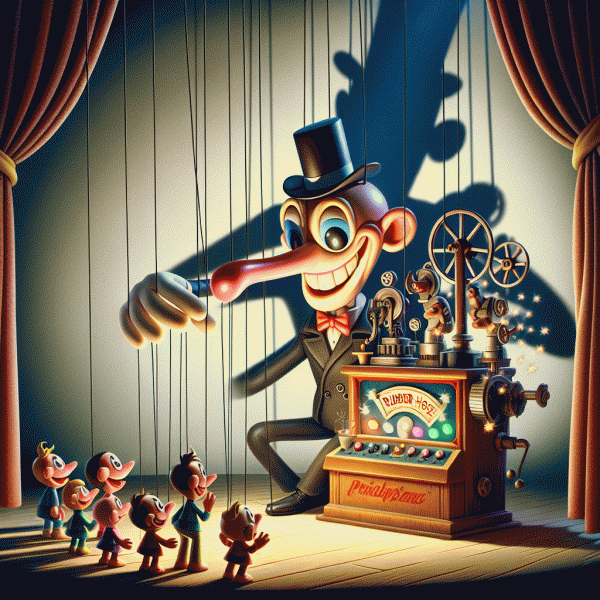

Algorithms aren't gullible so much as lazy: they reward clear, repeatable signals because those are easy to optimize for. When marketers buy likes, seed engagement pods, or rent bot armies, they inject loud but meaningless noise into the system. On the surface that noise looks like attention (a spike, a glossy like count, a string of short comments), and platforms interpret it as proof of value. The result is a temporary visibility boost that feels real, because the ranking models that control reach tend to reward early momentum over nuance.

Under the hood this is a feedback loop. Early engagement acts like fertilizer for distribution: a post that gets traction in the first minutes is more likely to be shown to more people, which generates more metrics, which in turn signals relevance. The problem is that fake engagement can trigger that loop without any underlying quality. Bots can click and like en masse, engagement pods can leave shallow one-line comments, and automated viewers can inflate view counts. The algorithm doesn't understand intent; it only sees numbers, so it dutifully amplifies content with noisy but timely signals.

The consequences are painfully practical. Paid engagement can deliver a measurable lift in impressions, reach, or short-term conversions, but it eats away at long-term ROI. It trains platforms to promote spectacle over substance, erodes real social proof, and can even lead to penalties when platforms detect inorganic behavior. More subtly, it distorts your analytics. High CTR but low time-on-site, lots of likes but few saves or purchases, and traffic arriving through odd referral chains are all red flags that the lift was synthetic. In plain terms: you may "win" a vanity metric while losing true audience trust and sustainable growth.

So how do you spot and stop noisy signals? Start with pattern recognition: look for sudden, unnatural spikes; a high like-to-follower or like-to-save ratio; identical or templated comments; and referral sources that don't match your usual audience geography. Use cohort and retention analysis rather than surface-level totals — real users will show meaningful downstream behavior (time on page, repeat visits, conversions). Instrument early warning systems: anomaly alerts on engagement velocity, a manual review of new top referrers, and sampling sessions to verify authentic interaction. These diagnostics turn guesswork into action.
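To make the engagement-velocity alert and the ratio checks concrete, here is a minimal Python sketch. It assumes you can export hourly like counts per post plus post-level likes, saves, and follower totals. The function names and every threshold (a 24-hour trailing window, a z-score of 3, more than 50 likes per save, likes above half the follower count) are hypothetical starting points, not platform rules.

```python
from statistics import mean, stdev


def velocity_spikes(hourly_likes, window=24, z_threshold=3.0):
    """Return the indices of hours whose like count is more than
    z_threshold standard deviations above the trailing window's mean."""
    spikes = []
    for i in range(window, len(hourly_likes)):
        history = hourly_likes[i - window:i]
        mu = mean(history)
        # Floor the deviation at 1 so a perfectly flat history
        # does not cause a division by zero.
        sigma = max(stdev(history), 1.0)
        if (hourly_likes[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes


def ratio_flags(likes, saves, followers,
                max_like_to_save=50.0, max_like_to_follower=0.5):
    """Return the names of the (hypothetical) ratio checks a post fails."""
    flags = []
    # max(..., 1) avoids dividing by zero when a post has no saves or followers.
    if likes / max(saves, 1) > max_like_to_save:
        flags.append("like-to-save")
    if likes / max(followers, 1) > max_like_to_follower:
        flags.append("like-to-follower")
    return flags


# Hypothetical data: a steady day of 10-12 likes per hour, then a burst.
hourly = [10, 12] * 12 + [300, 11]
print(velocity_spikes(hourly))                            # [24]
print(ratio_flags(likes=5000, saves=20, followers=2000))  # ['like-to-save', 'like-to-follower']
```

Treat a flag as a prompt for the manual review and session sampling described above, not as a verdict: a genuinely viral post can trip the same alarms.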

Finally, invest in signal quality instead of shortcuts. Seed campaigns with micro-influencers who can spark genuine conversation, optimize creative for depth (hooks that encourage replies or saves), and prioritize metrics that correlate with business outcomes (dwell time, conversions, LTV). Test paid amplification incrementally and pair it with conversion tracking so you know whether reach translates to value. The dark shortcut will always tempt marketers because it works temporarily, but the smarter play is to teach the algorithm to love your content for the right reasons — real engagement scales, fake love collapses.

Vanity vs Value: Spot the Metrics That Actually Move Revenue

Clicks feel like applause: loud, immediate, and emotionally satisfying. That is the problem. Too many teams mistake volume for victory and build dashboards that read like popularity contests. Start by mapping your customer journey and labeling which metrics actually sit on the revenue path. Impressions and likes are great for brand air cover, but they are not cash registers. Build a simple funnel for your product or service, then classify metrics by funnel stage. When every metric is treated as a north star, you end up optimizing for noise. Instead, choose one primary metric that moves dollars and a few diagnostic metrics that explain why it moved.

Here are three compact, action-oriented metrics to replace vanity noise with signals that scale:

- 🚀 Conversion: Measure the percentage of users who complete a meaningful action that directly leads to revenue, such as trial-to-paid or add-to-cart-to-purchase. Track both the short-term conversion rate and conversion velocity (how quickly users reach that action).

- 🐢 Retention: Look at cohort retention over weeks or months rather than single-day active counts. A sticky cohort drives lifetime value and makes acquisition spend repeatable.

- 💬 Revenue: Track average revenue per user (ARPU), customer acquisition cost (CAC), and lifetime value (LTV) together. Monitor margin per customer, not just top-line revenue, so growth is sustainable rather than expensive.
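The revenue metrics above fit together in a few lines of arithmetic. This sketch uses one common simplified LTV formula (monthly ARPU times gross margin, divided by monthly churn) and assumes all inputs describe the same month. The class and field names are hypothetical, and businesses with contracts, expansion revenue, or discounting will want a richer model.

```python
from dataclasses import dataclass


@dataclass
class UnitEconomics:
    monthly_revenue: float    # revenue earned in the month
    active_customers: int     # paying customers during that month
    acquisition_spend: float  # marketing and sales spend to win new customers
    new_customers: int        # customers acquired with that spend
    gross_margin: float       # fraction of revenue kept after direct costs, e.g. 0.8
    monthly_churn: float      # fraction of customers lost per month, e.g. 0.05

    @property
    def arpu(self) -> float:
        return self.monthly_revenue / self.active_customers

    @property
    def cac(self) -> float:
        return self.acquisition_spend / self.new_customers

    @property
    def ltv(self) -> float:
        # Simplified LTV: monthly margin per customer times expected
        # lifetime in months (1 / churn).
        return self.arpu * self.gross_margin / self.monthly_churn

    @property
    def ltv_to_cac(self) -> float:
        return self.ltv / self.cac

    @property
    def payback_months(self) -> float:
        # Months of per-customer margin needed to recover acquisition cost.
        return self.cac / (self.arpu * self.gross_margin)


m = UnitEconomics(50_000, 1_000, 30_000, 200, 0.8, 0.05)
print(m.arpu, m.cac, round(m.ltv), round(m.ltv_to_cac, 2), m.payback_months)
```

With $50,000 of monthly revenue from 1,000 customers, $30,000 spent to win 200 new ones, an 80 percent margin, and 5 percent monthly churn, this gives an ARPU of 50, a CAC of 150, an LTV of 800 (about 5.3 times CAC), and a payback period of 3.75 months.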

Turn those signals into operational routines. Run holdout experiments to prove incrementality, so you know paid spikes are creating new conversions rather than just shifting when existing ones happen. Use cohort-level analysis to detect whether a campaign brings better customers or simply more cheap clicks. Set attribution windows based on actual buying behavior; shortening a window to chase last-click credit favors cheap attention and harms long-run ROI. Pair experiments with predictive LTV modeling and a simple payback period for acquisition spend so the trading desk and the finance team can agree on affordable bids.
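A holdout test compares users eligible for the paid push against a randomly withheld group. The sketch below computes relative lift and a two-sided p-value with a standard pooled two-proportion z-test. It assumes random assignment and at most one conversion per user; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def incrementality(treat_conv, treat_n, hold_conv, hold_n):
    """Relative lift of the exposed group over a random holdout, with a
    pooled two-proportion z-test."""
    p_treat = treat_conv / treat_n
    p_hold = hold_conv / hold_n
    relative_lift = (p_treat - p_hold) / p_hold
    # Pooled conversion rate under the null hypothesis that paid had no effect.
    pooled = (treat_conv + hold_conv) / (treat_n + hold_n)
    se = sqrt(pooled * (1 - pooled) * (1 / treat_n + 1 / hold_n))
    z = (p_treat - p_hold) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return {"relative_lift": relative_lift, "z": z, "p_value": p_value}


result = incrementality(treat_conv=600, treat_n=20_000, hold_conv=500, hold_n=20_000)
print(result)
```

In this hypothetical example, 600 conversions from 20,000 exposed users against 500 from a 20,000-user holdout is a 20 percent relative lift with a p-value well under 0.05. If the lift disappears once conversions from later weeks are counted, the paid spike was mostly shifting timing.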

Finally, put guardrails in place and iterate fast. Create a weekly scorecard that shows one primary revenue metric, two diagnostics and one experiment outcome. If an initiative moves clicks but not conversion, pause or restructure it into a funnel optimization test. Reward teams for improving monetizable outcomes instead of vanity trophies. The dark art of paid engagement works because it exploits attention, but by shifting focus from applause to cash flow you keep the upside while closing the loopholes that turn clicks into expensive illusions.

Risky Business: Shadow Bans, Trust Erosion, and Brand Whiplash

Buying attention can feel like a shortcut — until the lights go out. Platforms get suspicious of activity patterns and quietly throttle reach: accounts that once showed up in feeds find themselves talking to a void. That's a shadow ban — your posts aren't removed, they're just demoted to the algorithmic attic. Symptoms include sudden falloffs in impressions, engagement concentrated among your own followers, and a weird mismatch between what your analytics show and what your team sees in the wild. The kicker? You might not get a notification, just a slow leak in performance that eats at budgets and morale.

Next comes trust erosion. Audiences are smarter than we give them credit for; they can smell canned comments and recycled engagement. When people notice inflated follower counts or generic praise, your brand's authenticity takes a hit: fewer shares, fewer referrals, and a record that lives forever in screenshots and commentary. Fixing that is expensive and slow. An immediate move: run a full audit of paid tactics and social accounts, remove obviously fake followers, and set up regular sentiment checks. Make your metrics about people, not vanity numbers: prioritize DMs, conversions, and longitudinal customer retention over shiny but hollow stats.

And then there's brand whiplash — when your audience's perception snaps from curious to concerned overnight. A campaign that looks clever in a briefing room can look deceptive on a timeline. Exposure can trigger not only consumer backlash but regulatory scrutiny if claims are misleading. Build in guardrails: diversify traffic sources so one channel's penalty doesn't flatten the business, require explicit disclosure in influencer posts, and maintain an ethical playbook with red flags that halt any tactic that trades short-term clicks for long-term trust.

Practical next steps you can act on today:

- Monitor: set alerts for sudden drops in impressions and listen for spikes in negative sentiment.

- Test small: run limited-scale experiments that cap your risk.

- Measure quality: use retention, conversions, saves, and meaningful replies as primary KPIs.

- Be transparent: label paid partnerships openly and build real collaborations.

- Plan for fallout: draft a response playbook and rehearse it.

Fewer, more honest moves beat gimmicks every time. In short: don't make your growth a magic trick; make it a relationship.

If You Must Pay: Smarter Plays That Do Not Torch Credibility

Paying for attention isn't a sin — it's a tool with a very sharp edge. The sin is hauling out that tool without a plan and watching your community get scorched. If you must sprinkle budget on engagement, think like a scientist, not a billboard salesman: define the precise behavior you want (shares, saves, trial signups), pick tiny pockets of spend to test hypotheses, and insist on short windows so you can stop the bleeding fast. Aim for experiments that teach — even losers should tell you why audiences recoil. Set guardrails: max spend per test, minimum uplift to scale, and a kill switch if sentiment slides.
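The three guardrails in that paragraph (a spend cap, a minimum uplift to scale, and a sentiment kill switch) can be written down as a single decision rule so nobody argues about them mid-test. This is a minimal sketch; the function name, the ordering of checks, and all three default thresholds are hypothetical and should be set per brand before the test starts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    max_spend: float = 500.0           # hypothetical spend cap per test
    min_uplift_to_scale: float = 0.10  # hypothetical: +10% relative uplift needed to scale
    max_sentiment_drop: float = 0.15   # hypothetical kill switch: 15% relative drop


def next_action(spend, uplift, baseline_sentiment, current_sentiment,
                rails=Guardrails()):
    """Return 'kill', 'scale', 'stop', or 'continue' for a paid test.

    Sentiment is any positive-share score (0-1) you already track."""
    drop = (baseline_sentiment - current_sentiment) / baseline_sentiment
    if drop > rails.max_sentiment_drop:
        return "kill"  # sentiment slid too far: stop regardless of results
    if spend >= rails.max_spend:
        # Budget for this test is used up: scale only if uplift cleared the bar.
        return "scale" if uplift >= rails.min_uplift_to_scale else "stop"
    return "continue"


print(next_action(spend=200, uplift=0.00, baseline_sentiment=0.60, current_sentiment=0.45))
print(next_action(spend=500, uplift=0.12, baseline_sentiment=0.60, current_sentiment=0.58))
```

Checking sentiment first is a deliberate choice: a test that is winning on clicks while community mood sours should still be stopped.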

Make paid outcomes feel earned, not rented. Use three compact promises to guide every buy:

- 💁 Testing: Run small A/B tests on creative, CTA, and targeting before you pour money in; treat each campaign like a hypothesis, not hope.

- 🚀 Fit: Match format to platform and tone — a stiff sell on a friendly community feed will fail; native placements and contextual relevance convert better than blunt interruptions.

- 👥 Disclosure: Be honest about sponsored posts or incentives; transparent partnerships build trust faster than any stealthy viral stunt.

Operationally, translate those promises into processes. Deliver tight creative briefs with desired outcomes, sample lines that feel human, and absolute no-go phrases that betray your values. Prefer user-generated formats and micro-influencers who actually use your product — their endorsements read like recommendations, not ads. Route paid traffic to purpose-built landing experiences that continue the story instead of slapping a bannered headline on the homepage. Instrument everything: UTM tags, short links, cohort retention, and qualitative feedback. If a paid push spikes clicks but tanks day-7 retention or prompts complaints, it's doing damage despite glossy numbers.
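Consistent UTM tagging is what lets you tie a paid push to downstream retention later. `utm_source`, `utm_medium`, `utm_campaign`, and `utm_content` are the standard UTM parameter names that analytics tools such as Google Analytics read; the helper function, the example.com URL, and the campaign values below are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def add_utm(url, source, medium, campaign, content=None):
    """Append UTM parameters to a URL, keeping any existing query string."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium,
                  "utm_campaign": campaign})
    if content:
        # utm_content distinguishes creatives or creators within one campaign.
        query["utm_content"] = content
    return urlunparse(parts._replace(query=urlencode(query)))


print(add_utm("https://example.com/landing?ref=a",
              "instagram", "paid_social", "spring_test", "creator_a"))
```

Tagging each creator or creative separately through `utm_content` makes it possible to compare day-7 retention per creator rather than per campaign.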

Keep budgets nimble and decisions reversible: start with tiny buys, tweak creatives weekly, and reallocate to winners quickly. Celebrate small wins that improve lifetime value over vanity metrics, and fold learnings into organic content so your audience gets consistent storytelling rather than disjointed sell-scripts. When you pay, pay for authenticity — creative freedom within brand rules, fair compensation for creators, and clear disclosure. That way you get the reach you need without selling out the one thing that lasts: credibility. Spend smart, measure ruthlessly, and let integrity scale your returns.

The Exit Plan: Weaning Off Paid Hype and Keeping the Momentum

Think of paid hype as training wheels that help the bike get moving while the rider learns to steer. Start the exit plan with a clearly defined horizon and measurable checkpoints so every cut is deliberate. Choose one primary metric that will prove paid traffic translated into sustainable demand (for example first-week retention, activation rate, or repeat purchase frequency) and set minimum quality thresholds for leads, creatives, and landing pages. Create an 8- to 12-week timeline with weekly reviews and three formal checkpoints where the team either cuts spend further, holds it steady, or reverses the most recent cut if quality slipped. Align incentives across marketing, product, and customer success so no team is surprised by a cut.

Run the taper like a laboratory experiment rather than a panic move. Reduce budget in defined increments, for instance 20 percent, then 30 percent of the remaining spend, then another 30 percent of what is left, and observe performance after each step before proceeding. Keep the highest-performing channels but prune the long tail that only produced shallow clicks. Shift some creative testing spend into low-cost validations such as smaller geographies, narrow interest segments, or time-bound promos. Reserve a dedicated holdback pool equal to 5 to 10 percent of peak spend and use it to validate organic replacements and recovery plays. Frequency-cap heavily exposed audiences, rotate creative on two-week cycles to avoid fatigue, and document every adjustment so the team learns which levers truly move long-term value.
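Because each cut applies to the spend that remains rather than to the original peak, the cumulative reduction is smaller than the percentages suggest. This sketch computes the spend level after each step and the holdback range; the function names and the $100,000 peak are hypothetical.

```python
def taper_schedule(peak_spend, cuts=(0.20, 0.30, 0.30)):
    """Spend level after each cut, where every cut is a fraction of the
    spend remaining at that point (not of the original peak)."""
    levels = []
    spend = peak_spend
    for cut in cuts:
        spend *= 1 - cut
        levels.append(round(spend, 2))
    return levels


def holdback_pool(peak_spend, low=0.05, high=0.10):
    """Range for the reserved validation budget, as a share of peak spend."""
    return peak_spend * low, peak_spend * high


peak = 100_000  # hypothetical monthly peak spend
print(taper_schedule(peak))  # [80000.0, 56000.0, 39200.0]
print(holdback_pool(peak))
```

Three steps take a $100,000 month down to $39,200, a cumulative cut of about 61 percent, while the holdback pool sits between $5,000 and $10,000.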

Protect and expand owned channels so momentum does not vanish when paid steps back. Repurpose paid creative into email subject lines, short-form social clips, blog posts, product page copy, and help center content. Build onboarding sequences and product tours that catch the users paid brought in and turn that cohort into retained customers. Invest in SEO around the high-intent queries that paid revealed, and launch small partner or referral programs to amplify word of mouth. Tie product roadmap priorities to the signals paid uncovered; sometimes a single tweak in onboarding or pricing turns a paid spike into a durable flywheel. Even modest improvements to individual funnel steps can shift the economics enough to make the next reduction painless.

Measurement becomes the safety net during the exit. Use cohort analysis and LTV-to-CAC comparisons as the backbone of your decisions, and insist on controlled experiments before broad cuts. Simulate a true cut by pausing campaigns in a few test markets or user segments and observe the conversion cascade; that controlled experiment will show whether the funnel can stand without paid. Build dashboards with guardrail alerts for key signals such as percentage drop in conversion, CPA drift, and churn velocity, and define statistical thresholds up front (for example a minimum detectable effect and a 95 percent confidence level) so the team avoids chasing noise. Use longer attribution windows where buying cycles are long, and keep a preapproved rollback playbook tied to each alert.
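Defining the minimum detectable effect up front also tells you how large a test market must be before the pause-and-observe experiment can say anything. This sketch uses the standard normal-approximation sample size formula for comparing two conversion rates at a two-sided 95 percent confidence level; the 80 percent statistical power default and the function name are assumptions added for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Users needed in each group to detect a relative change of
    relative_mde in a conversion rate (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


print(sample_size_per_arm(baseline_rate=0.03, relative_mde=0.10))
```

With a 3 percent baseline conversion rate, detecting a 10 percent relative change needs roughly 53,000 users per group. If your test markets are far smaller, either accept a larger MDE or run the pause for longer.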

Turn this plan into a compact runbook that defines who reduces which line items, what KPIs get checked at each checkpoint, and how freed budget will be redeployed into retention or product fixes. Celebrate small wins when organic channels fill gaps and keep the creative testing cadence alive so learnings compound. Treat paid as a scalpel rather than a crutch: use it precisely to discover demand and signals, log outcomes, then try to run without it. The darker truth about paid is not that it works, but that it can hide structural problems; exit with curiosity, not panic, and convert those paid signals into systems so momentum survives the taper and growth becomes more sustainable over six months and beyond.