Optimization or Obfuscation? 5 Tests to Check Your Conscience

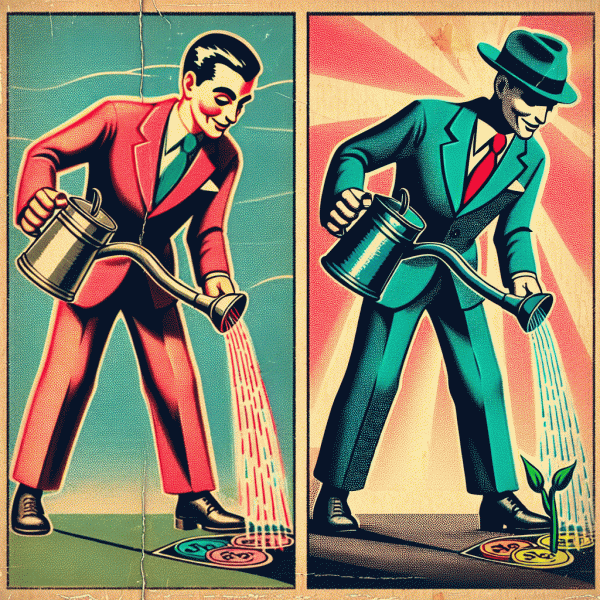

You can feel good about spikes, dashboards, and the SEO fairy dust, but not all lifts deserve champagne. Run these conscience checks like a pre-flight checklist: quick, objective, and a little embarrassing if you fail. The goal here isn't to be puritanical about growth; it's to separate real value from clever accounting. If an experiment makes you squirm when you explain it to your grandmother (or to Legal), you probably need to iterate. These five tests fit into daily standups and post-mortems; they're designed to take minutes, not boardroom therapy.

Test 1: Visibility. Open the product to someone who doesn't work on it and watch. If they can't explain what changed in thirty seconds, you optimized for obfuscation, not users. Action: create a one-sentence changelog and a timestamped screenshot or short clip; if it reads like marketing spin, refactor.

Test 2: User Benefit. For every metric you lift, name the human benefit in plain language. If your sentence is "increase lifetime value," translate it: "this helps Jamie finish checkout without a coupon crunch." If you can't, you're optimizing a number, not an experience.

Test 3: Consent and Context. Did you restrict choices, hide costs, or push nudges until users felt trapped? Small confirmation steps and clear exit paths fix a lot. Action: simulate a full user journey, including error states and back buttons; count how many times a user is surprised.

Test 4: Fairness Check. Segmented wins look great until you realize they exclude people with slower devices or different languages. Action: run your variant against low-bandwidth connections and non-default locales and ask whether the lift persists. If it disappears, you're engineering fragility, not growth.
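The fairness check can be run as a per-segment lift comparison. The sketch below is a minimal illustration, assuming you can export conversions and visitors per segment for both arms; the segment names, the `results` shape, and the `fragile_segments` helper are hypothetical, not part of any analytics tool.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Conversions divided by visitors, or 0.0 when there were no visitors."""
    return conversions / visitors if visitors else 0.0


def lift_by_segment(results):
    """Relative lift of variant over control for each segment.

    results maps segment -> {"control": (conversions, visitors),
                             "variant": (conversions, visitors)}.
    A segment with a zero control rate gets None (lift is undefined).
    """
    lifts = {}
    for segment, arms in results.items():
        control = conversion_rate(*arms["control"])
        variant = conversion_rate(*arms["variant"])
        lifts[segment] = (variant - control) / control if control else None
    return lifts


def fragile_segments(results, min_lift=0.0):
    """Segments where the lift is at or below min_lift, i.e. it did not persist."""
    return sorted(
        segment
        for segment, lift in lift_by_segment(results).items()
        if lift is not None and lift <= min_lift
    )


# Illustrative numbers only, not real experiment data.
results = {
    "default": {"control": (50, 1000), "variant": (60, 1000)},
    "low_bandwidth": {"control": (40, 1000), "variant": (38, 1000)},
    "non_default_locale": {"control": (45, 1000), "variant": (45, 1000)},
}
print(fragile_segments(results))
```

This compares point estimates only. With small segments you would also want a significance test before concluding that the lift really vanished.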

Test 5: Replicability and Audit Trail. Can someone reproduce the result three months later? Record rollouts, cohort dates, sample sizes, and the exact hypothesis. If the experiment can't be reconstructed, it's not knowledge; it's noise. For quick reference, use this tiny checklist when you're about to ship:

- 🚀 Transparency: Can you explain the change in one sentence?

- ⚙️ Intent: Does the stated benefit map to a real user outcome?

- 👥 Outcome: Will this still help a diverse set of users?
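The audit trail from Test 5 can be as small as one structured record per experiment. Here is one possible shape, assuming you store it as JSON next to the experiment config; every field name here is a hypothetical choice, so adapt it to whatever your team already tracks.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date


@dataclass(frozen=True)
class ExperimentRecord:
    """One reproducible record per experiment (field names are illustrative)."""
    name: str
    hypothesis: str
    one_sentence_changelog: str
    cohort_start: date
    cohort_end: date
    sample_size_control: int
    sample_size_variant: int
    rollout_percent: float

    def to_json(self) -> str:
        """Serialize with ISO dates so the record can be versioned as text."""
        payload = asdict(self)
        payload["cohort_start"] = self.cohort_start.isoformat()
        payload["cohort_end"] = self.cohort_end.isoformat()
        return json.dumps(payload, sort_keys=True)


def missing_fields(record: ExperimentRecord) -> list:
    """Names of text fields that were left blank."""
    return [
        key
        for key, value in asdict(record).items()
        if isinstance(value, str) and not value.strip()
    ]


record = ExperimentRecord(
    name="checkout-copy-v2",
    hypothesis="Clearer shipping copy reduces checkout abandonment.",
    one_sentence_changelog="Shipping cost now appears on the cart page.",
    cohort_start=date(2024, 1, 8),
    cohort_end=date(2024, 1, 22),
    sample_size_control=5000,
    sample_size_variant=5000,
    rollout_percent=50.0,
)
print(record.to_json())
```

A blank hypothesis or changelog shows up in `missing_fields`, which makes a cheap pre-ship gate.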

The Dirty Dozen: Tactics That Trigger Penalties, Backlash, or Both

Think of the "Dirty Dozen" as the cheat codes marketers whisper at late-night conferences: quick lift, immediate bragging rights, but often the price is an ad ban, a PR bonfire, or months of lost trust. These twelve tactics live on a thin ledge between clever growth and outright grift — they work because they exploit human urgency, platform blind spots, or regulatory gray areas. The point of calling them out isn't moralizing; it's practical: some shortcuts destroy the very signals (account health, authentic engagement, legal safety) you need to scale. Before you hit 'boost,' run a five-minute risk check: who loses access, who can sue, and who will be loudly offended on social? If any answer includes legal teams, major partners, or a likely viral complaint, put that tactic in the slow cooker.

Here are three of the most combustible offenders to watch for right now:

- 💥 Deceptive Claims: Ads or landing pages that overpromise results ('Get perfect skin in 7 days') or falsely imply endorsements; platforms and regulators treat demonstrable consumer harm as a red line, and it's the fastest route to takedowns and fines.

- 🤖 Bot Engagement & Fake Metrics: Buying followers, auto-comments, or artificially inflating views to manipulate algorithms — sure, your vanity metrics spike, but your learning signal gets polluted, your reach quality collapses, and platforms are increasingly sophisticated at flagging inorganic patterns.

- 💩 Dark Patterns & Forced Upsells: Hidden fees, pre-checked opt-ins, or convoluted cancellation flows that trick users into paying; these produce immediate conversions but also chargebacks, legal headaches, and scathing viral posts that outlive any short-term gain.

The remaining nine are just as dangerous even if they're less flashy: cloaking content to bypass reviewers; flooding review sections with fake testimonials; repurposing influencer creative without rights; scraping personal data to microtarget; bait-and-switch pricing; abusing lookalike audiences formed on illicit lists; cookie-stuffing to claim credit for conversions; buying traffic from sketchy networks; and mis-targeting ads to protected groups. What unites them is simple — they optimize for a one-time conversion and corrode the platform signals, human trust, and legal standing that enable sustainable growth. Replace tactics that depend on deception with process fixes: require evidence for bold claims, keep provenance records for social proof creatives, establish vendor vetting for any third-party traffic, and run a compliance checklist before any campaign goes live.

Here are some tactical moves you can implement this afternoon to avoid becoming the cautionary tale: add a three-question red-team review ('Is this claim provable?', 'Who suffers if it's false?', 'Could a regulator or platform flag this?'); set automated thresholds that pause campaigns with sudden conversion spikes pending human review; keep a transparent corrections and refund policy to defuse chargebacks; insist on signed rights and usage logs for influencer content; and freeze scaling for two weeks on winners that rely on high-pressure psychology. Growth that survives scrutiny is growth you can celebrate at scale: it's sustainable, saleable, and far more satisfying than a one-week vanity boost followed by an account suspension.
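The automated pause threshold can start as a simple z-score against recent history. This is a rough sketch, assuming daily conversion counts per campaign; the `should_pause` name, the 3-sigma threshold, and the 7-day minimum are illustrative defaults, not recommendations from any ad platform.

```python
from statistics import mean, stdev


def should_pause(history, today, z_threshold=3.0, min_days=7):
    """Return True when today's conversions spike far above recent history.

    history: daily conversion counts for previous days (oldest first).
    today:   today's conversion count.
    With fewer than min_days of history there is no baseline, so never pause.
    """
    if len(history) < min_days:
        return False
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase is out of pattern.
        return today > baseline
    return (today - baseline) / spread > z_threshold


history = [100, 104, 98, 101, 97, 103, 99]
print(should_pause(history, 180))  # far above baseline
print(should_pause(history, 103))  # within normal variation
```

A pause here means "hold for human review," matching the text above, rather than an automatic kill.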

Paid Amplification Done Right: Transparent Moves That Scale Safely

Paid amplification is not a magic money tree; it is a precision instrument. When you treat it like one, it sharpens reach without sacrificing reputation. Start by making value explicit: tell prospects what you are promoting, why it matters, and what success looks like for them. Transparency is not optional marketing fluff. It is the lubricant that lets conversion rates rise without wearing down customer trust. Frame your ad creative around honest outcomes, and let analytics confirm what you promised.

Operationalize transparency with systems, not platitudes. Use deterministic tracking where possible, layer in privacy-forward solutions like conversion APIs and server-side events, and standardize UTM parameters so every click maps to an outcome. Define three clear KPIs per campaign (for example, Awareness: reach, Acquisition: CPA, Retention: LTV) and publish them internally. Spend allocation should be auditable: record who approved budgets, why audiences were chosen, and what creative variants ran when. This makes scaling a repeatable process and turns "boost" into a responsible lever.
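Standardizing UTM parameters is easiest when nobody types them by hand. A small sketch using Python's standard `urllib.parse`; the lowercase-and-hyphenate rule in `normalize` and the choice of required keys are assumptions, so swap in your own naming convention.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

REQUIRED_UTM = ("utm_source", "utm_medium", "utm_campaign")


def normalize(value: str) -> str:
    """Lowercase and hyphenate so 'Spring Launch' and 'spring launch' match."""
    return "-".join(value.strip().lower().split())


def tag_url(url, source, medium, campaign, content=None):
    """Return url with consistent UTM parameters, replacing any existing ones."""
    parts = urlsplit(url)
    # Keep non-UTM query parameters; drop old UTM values to avoid duplicates.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith("utm_")
    ]
    query += [
        ("utm_source", normalize(source)),
        ("utm_medium", normalize(medium)),
        ("utm_campaign", normalize(campaign)),
    ]
    if content:
        query.append(("utm_content", normalize(content)))
    return urlunsplit(parts._replace(query=urlencode(query)))


def missing_utm(url):
    """List required UTM keys that are absent or blank on a URL."""
    present = {key for key, value in parse_qsl(urlsplit(url).query) if value}
    return [key for key in REQUIRED_UTM if key not in present]


print(tag_url("https://example.com/landing?ref=1", "Newsletter", "Email", "Spring Launch"))
```

Running `missing_utm` over every destination URL before launch is a quick way to catch clicks that would never map to an outcome.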

Run experiments that favor clarity over cleverness. A/B test creative hypotheses alongside ethical signals: are you using dark patterns to drive immediacy? Is messaging ambiguous about pricing, trials, or renewal terms? Swap tricks for transparency and watch engagement quality improve. Control frequency to avoid ad fatigue; heavy rotation of the same promise feels pushy, while honest storytelling builds familiarity. Leverage authentic social proof and real user outcomes; nothing scales trust like demonstrable results that align with your claims.

Before you expand spend, perform a five-minute ad audit that anyone on the team can follow. Confirm metrics alignment (campaign KPIs match business goals), consent hygiene (tracking respects privacy choices), creative truth (claims substantiated), and reporting rigor (raw data stored and versioned). If the audit passes, scale incrementally and keep the loop tight: small lift, measure, repeat. If something trips the audit, fix it before pouring in more budget. The best growth feels inevitable because it was earned, not engineered; that subtle difference is the line between effective amplification and a campaign that feels like a bait-and-switch. Choose the former, and the machine you build will reward both revenue targets and reputation.

Red Flags in Your Metrics: Sudden Spikes, Hollow Clicks, and Bot Breath

Picture your analytics dashboard as a crowded party where everyone's shouting about a mysterious guest who just walked in with a limo. Sudden spikes, floods of clicks that don't behave like humans, and ghostly conversions are those uninvited guests — flashy, distracting, and expensive if you give them the VIP treatment. Instead of reflexively scaling what looks good, learn to interrogate the pattern: was that surge driven by real intent, a seeded placement, or something pretending very convincingly to be attention?

Spotting a spike that isn't real: the signatures are oddly consistent. Traffic that arrives in precise batches, from a single country you never targeted, with 100% new sessions and zero downstream engagement is suspicious. Look for identical UTM strings, a sudden drop in conversion rate despite rising clicks, or contemporaneous jumps in a single placement. Practical next steps: segment the spike by source and device, compare it to historical baselines, and timestamp the first hit to see if it coincides with a creative or bid change. If the surge collapses as soon as you pause the campaign, that's a big hint.
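The signatures above translate into a handful of boolean checks per traffic segment. The sketch below assumes you can export sessions, new sessions, engaged sessions, and country for each segment; the dictionary keys, flag names, and the 3x surge ratio are hypothetical choices for illustration.

```python
def spike_red_flags(segment, baseline_sessions, targeted_countries, surge_ratio=3.0):
    """Return the list of suspicious signatures a traffic segment shows.

    segment: dict with 'sessions', 'new_sessions', 'engaged_sessions', 'country'.
    baseline_sessions: typical sessions for this segment over the same window.
    targeted_countries: set of country codes the campaign actually targets.
    """
    flags = []
    sessions = segment["sessions"]
    if sessions == 0:
        return flags
    if baseline_sessions and sessions / baseline_sessions >= surge_ratio:
        flags.append("surge_vs_baseline")
    if segment["country"] not in targeted_countries:
        flags.append("untargeted_country")
    if segment["new_sessions"] == sessions:
        flags.append("all_new_sessions")
    if segment["engaged_sessions"] == 0:
        flags.append("no_downstream_engagement")
    return flags


# Illustrative segment, not real data.
suspect = {"sessions": 500, "new_sessions": 500, "engaged_sessions": 0, "country": "XX"}
print(spike_red_flags(suspect, baseline_sessions=50, targeted_countries={"US", "CA"}))
```

One flag alone is rarely conclusive; several at once on the same source is the pattern worth pausing for.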

Hollow clicks: your CTR can be a cruel seducer. High click-throughs paired with razor-thin session duration, single-page visits, or a vanishingly low event rate mean you're buying attention, not outcomes. Instrumentation is your friend here: add meaningful events (scroll depth, video plays, key button clicks) and session recordings on landing pages so you can tell genuine interest from drive-by curiosity. Filter placements by engagement score, exclude low-quality partners, and consider raising minimum viewability or time-on-page thresholds in your ad buys.
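Filtering placements by engagement can be sketched as an engaged-session rate per placement. The definition of "engaged" below (a meaningful event fired, or a multi-page visit lasting at least ten seconds) is an assumption for illustration, as are the field names and thresholds.

```python
from collections import defaultdict


def is_engaged(session, min_duration_s=10.0):
    """Assumed definition: a meaningful event fired, or a real multi-page visit."""
    multi_page_visit = session["pages"] > 1 and session["duration_s"] >= min_duration_s
    return session["events"] > 0 or multi_page_visit


def placement_stats(sessions, min_duration_s=10.0):
    """Count total and engaged sessions for each placement."""
    stats = defaultdict(lambda: {"sessions": 0, "engaged": 0})
    for session in sessions:
        row = stats[session["placement"]]
        row["sessions"] += 1
        row["engaged"] += int(is_engaged(session, min_duration_s))
    return dict(stats)


def placements_to_exclude(sessions, min_rate=0.2, min_sessions=3):
    """Placements with enough traffic to judge and an engaged rate below min_rate."""
    return sorted(
        placement
        for placement, row in placement_stats(sessions).items()
        if row["sessions"] >= min_sessions
        and row["engaged"] / row["sessions"] < min_rate
    )


# Illustrative sessions, not real data.
sessions = (
    [{"placement": "app_x", "duration_s": 1.0, "pages": 1, "events": 0}] * 4
    + [{"placement": "blog_y", "duration_s": 45.0, "pages": 3, "events": 2}] * 2
    + [{"placement": "blog_y", "duration_s": 2.0, "pages": 1, "events": 0}]
    + [{"placement": "tiny_z", "duration_s": 1.0, "pages": 1, "events": 0}]
)
print(placements_to_exclude(sessions))
```

The `min_sessions` guard keeps a single unlucky visit from blacklisting a placement that has not had a fair sample yet.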

Bot breath and fake engagement: bots don't always announce themselves with riddles; often they whisper through repeat IP ranges, identical user agents, or unnaturally fast pacing between pages. Third-party traffic vendors can amplify this if you're not careful. Defenses that actually work include server-side request logging, checking for repeated GCLIDs or tracking IDs, implementing CAPTCHAs on critical form flows, and using a reputable bot-mitigation layer or fingerprinting service. On the analytics side, create a “quality traffic” view, apply filters that remove suspicious IP ranges, and set alerts for abnormal spikes in short-session traffic.
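Two of these signals, reused click IDs and unnaturally fast pacing, are easy to check in server-side logs. A minimal sketch, assuming each logged hit carries a `session_id`, a timestamp in seconds (`ts`), and an optional `click_id`; those field names and the thresholds are hypothetical, and a real bot-mitigation layer does far more than this.

```python
from collections import defaultdict


def reused_click_ids(hits, max_sessions=1):
    """Click IDs that appear in more sessions than a single human click should."""
    sessions_per_click = defaultdict(set)
    for hit in hits:
        if hit.get("click_id"):
            sessions_per_click[hit["click_id"]].add(hit["session_id"])
    return sorted(
        click_id
        for click_id, sessions in sessions_per_click.items()
        if len(sessions) > max_sessions
    )


def rapid_fire_sessions(hits, min_gap_s=1.0, min_hits=3):
    """Sessions where every gap between consecutive hits is under min_gap_s."""
    stamps_by_session = defaultdict(list)
    for hit in hits:
        stamps_by_session[hit["session_id"]].append(hit["ts"])
    flagged = []
    for session_id, stamps in stamps_by_session.items():
        stamps.sort()
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        if len(stamps) >= min_hits and all(gap < min_gap_s for gap in gaps):
            flagged.append(session_id)
    return sorted(flagged)


# Illustrative log rows, not real data.
hits = [
    {"session_id": "s1", "ts": 0.0, "click_id": "abc"},
    {"session_id": "s1", "ts": 0.2, "click_id": "abc"},
    {"session_id": "s1", "ts": 0.4, "click_id": "abc"},
    {"session_id": "s2", "ts": 5.0, "click_id": "abc"},
    {"session_id": "s3", "ts": 10.0, "click_id": "xyz"},
    {"session_id": "s3", "ts": 40.0, "click_id": "xyz"},
    {"session_id": "s3", "ts": 95.0, "click_id": "xyz"},
]
print(reused_click_ids(hits), rapid_fire_sessions(hits))
```

Treat the output as a shortlist for human review, since shared devices and aggressive prefetching can trip either check.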

Here's a tiny audit you can run in 48 hours: isolate the spike, confirm geographic and device diversity, validate conversion paths with event-level data, and pause any line items that fail two of those checks. Then put guardrails in place — minimum engagement KPIs, automated alerts, and a small-scale validation campaign before you crank spend. In short: interrogate the applause before you hand out encore tickets. It's cheaper to investigate now than to sponsor a headline that turns out to be fake.

Build Moats, Not Mirages: Sustainable Engagement That Outlasts Algorithms

Algorithms are like weather: dramatic, newsworthy, and impossible to predict on a long timeline. A boost can feel like sunshine for your metrics, but what you really want is a foundation that survives the storm. Treat growth like compound interest rather than fireworks: design experiences that nudge people back day after day, not just convince them to click once. That means building a product that earns attention through usefulness, a culture that invites return visits, and content that keeps delivering value long after the platform flips its strategy.

Start with the product moment that matters most and make it impossible to skip. Map the first hour and the first seven days of use, then remove friction and add small wins until time to value is obvious. Create low-cost rituals that reward repeat behavior: a checklist that clears over time, a weekly digest that surfaces personal wins, or a native event that turns passives into participants. Make feedback explicit and immediate so users know their actions matter; this is where product design and community practice meet to form a real moat.

Measure the right signals so you avoid mistaking mirages for momentum. Track retention cohorts and activation funnels instead of raw vanity counts. Look for leading indicators like second-week retention, feature adoption rate, and invited-user conversion. Run small experiments with holdout groups and rolling launches to see which changes actually move these indicators. Complement quantitative work with quick qualitative calls and open channels for user stories; the most actionable insights about habit formation will come from observing how people actually use the surface you built.
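Second-week retention is one of the leading indicators named above, and it is simple to compute from signup and activity dates. The sketch below assumes "second week" means days 7 through 13 after signup and only counts users whose second week has fully elapsed; both the definition and the function name are assumptions, so align them with how your team already defines cohorts.

```python
from datetime import date, timedelta


def second_week_retention(signups, activity, as_of):
    """Share of eligible users active between day 7 and day 13 after signup.

    signups:  {user_id: signup_date}
    activity: {user_id: [dates the user was active]}
    as_of:    users whose second week has not finished by this date are skipped.
    """
    eligible = 0
    retained = 0
    for user_id, signup in signups.items():
        if signup + timedelta(days=14) > as_of:
            continue  # second week still in progress; counting now would bias low
        eligible += 1
        if any(7 <= (day - signup).days < 14 for day in activity.get(user_id, [])):
            retained += 1
    return retained / eligible if eligible else 0.0


# Illustrative users, not real data.
signups = {
    "a": date(2024, 1, 1),
    "b": date(2024, 1, 1),
    "c": date(2024, 1, 5),
    "d": date(2024, 1, 2),
    "e": date(2024, 1, 25),
}
activity = {
    "a": [date(2024, 1, 9)],
    "b": [date(2024, 1, 3), date(2024, 1, 20)],
    "c": [date(2024, 1, 12)],
}
print(second_week_retention(signups, activity, as_of=date(2024, 1, 31)))
```

Running the same function on a holdout group and on the treated group gives a like-for-like comparison of whether a change actually moved the indicator.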

Give your roadmap a simple rhythm: fix the first experience in the next 30 days, establish community rituals and evergreen content in 60, then double down on the features that show compounding returns by 90. Avoid buying attention that evaporates the moment the ad budget stops. Instead, invest in frictionless value, social loops that reward contribution, and content scaffolding that keeps working when the algorithm shifts. Build what people return to because they love it, not because some feed served it to them once.