Meet the earners: students, night owls, and global pros

Think of the micro-task economy as a college dorm party where three personality types always linger by the punch bowl: the student cramming cash between classes, the night owl harvesting tasks while everyone sleeps, and the global pro who treats pennies like puzzle pieces to build a paycheck. Each shows up for different reasons—flexibility, spare-time monetization, or income diversification—and each faces the same truth: most tasks are priced like pocket change. That doesn't make the work worthless; it makes strategy essential. Certain task categories—quick surveys, image labeling, content moderation, short transcriptions—repeat across platforms, and winners study approval windows, rejection causes, and batching opportunities. Once you stop treating every hit as isolated and start viewing them as combinable units of time, tiny payments begin to add up into something meaningful rather than maddening.

Students win on schedule optimization and speed. With classes, labs, and social lives, they turn micro-tasks into micro-sprints: 20-minute focused bursts between lectures that prioritize tasks with clear instructions and fast approval. Quick rule: pick tasks you can finish end-to-end in one sitting and aim for platforms with predictable payments. Set up simple browser templates or keyboard text expanders for repetitive entries, use a cheap second device or phone hotspot when campus Wi‑Fi is flaky, and run a one-week experiment to calculate your real hourly rate. If the number is too low, stop and regroup rather than grinding through long, unprofitable sessions. Think of those short windows as high-yield study breaks: treat them like concentrated assignments with a deadline, not background noise.
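
The one-week experiment can be as simple as logging each sprint's minutes and approved earnings, then dividing. Below is a minimal Python sketch. The `Session` class, the sample numbers, and the $6.00 threshold are hypothetical placeholders, not figures from any platform.

```python
# One-week experiment sketch: log each micro-sprint, then compute the real
# hourly rate. Session data and the $6.00 threshold are hypothetical.
from dataclasses import dataclass


@dataclass
class Session:
    minutes: float  # all time spent, including hunting for tasks and reading instructions
    earned: float   # approved payout in dollars


def real_hourly_rate(sessions: list[Session]) -> float:
    """Total approved earnings divided by total hours spent."""
    total_minutes = sum(s.minutes for s in sessions)
    if total_minutes == 0:
        return 0.0
    total_earned = sum(s.earned for s in sessions)
    return total_earned / (total_minutes / 60)


week = [
    Session(minutes=20, earned=1.40),
    Session(minutes=20, earned=0.90),
    Session(minutes=25, earned=2.10),
]

MIN_HOURLY = 6.00  # hypothetical personal threshold
rate = real_hourly_rate(week)
print(f"Real hourly rate: ${rate:.2f}")  # prints: Real hourly rate: $4.06
print("Keep going" if rate >= MIN_HOURLY else "Stop and regroup")
```

With these sample numbers, 65 minutes yield $4.40. That is roughly $4.06 an hour, below the threshold. Count every minute, including time spent searching, or the rate will look better than it is.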

Night owls exploit time zones and lower competition, which is prime real estate if you're disciplined and caffeinated. While your local market sleeps, requesters in other time zones keep posting, task pools refill, and approval queues can move faster. That said, the busiest late-night batches often pay the least per unit, so be selective. Favor tasks with crystal-clear instructions, keep headphones handy for audio checks, and use a tiny scoreboard to track tasks completed, average payout, and approval rate. Pro move: rotate between platforms based on where high-pay, low-competition tasks surface at night, and raise the minimum pay you'll accept as your approval history improves. Over weeks, those steady late-night sessions compound into reliable supplemental income, especially when you eliminate low-yield work and protect your effective hourly rate.
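
A scoreboard like this needs only a few lines of code. This Python sketch assumes you record each submission as a payout plus an approved/rejected flag. The `Scoreboard` class and the sample values are illustrative and are not part of any platform's tooling.

```python
# A tiny late-night scoreboard (hypothetical helper, not a platform API).
# Each submission is recorded as a payout in dollars plus whether it was approved.
from dataclasses import dataclass, field


@dataclass
class Scoreboard:
    payouts: list[float] = field(default_factory=list)
    approvals: list[bool] = field(default_factory=list)

    def record(self, payout: float, approved: bool) -> None:
        self.payouts.append(payout)
        self.approvals.append(approved)

    @property
    def tasks_completed(self) -> int:
        return len(self.payouts)

    @property
    def average_payout(self) -> float:
        # Averaged over approved tasks only, because rejected work pays nothing.
        approved = [p for p, ok in zip(self.payouts, self.approvals) if ok]
        return sum(approved) / len(approved) if approved else 0.0

    @property
    def approval_rate(self) -> float:
        # Share of submitted tasks that were approved.
        if not self.approvals:
            return 0.0
        return sum(self.approvals) / len(self.approvals)


board = Scoreboard()
for payout, ok in [(0.05, True), (0.08, True), (0.05, False), (0.12, True)]:
    board.record(payout, ok)

print(board.tasks_completed, f"${board.average_payout:.3f}", f"{board.approval_rate:.0%}")
# prints: 4 $0.083 75%
```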

Global pros treat micro-tasks as one polished instrument in a diversified gig toolkit. They automate the repetitive bits, bulk-apply to better-paying task types, and factor currency conversion into their rate calculations, because exchange rates can make a small payout stretch further in some regions. They also know why you keep seeing pennies: platform design, an oversupply of workers, geographic pricing tiers, and automated tooling all compress payouts. The solution isn't rage-clicking; it's methodical optimization. Actionable trio: (1) set a personal minimum hourly rate and refuse tasks beneath it, (2) batch similar tasks to cut context-switching overhead, and (3) export weekly stats into a simple spreadsheet to prune chronic low performers. Add reputation management (fast, accurate submissions and polite communication) and you'll gain access to better batches. Do this, and students, night owls, and pros stop looking like people resigned to pennies and start looking like a community of micro-entrepreneurs assembling a surprisingly solid income from tiny, well-chosen pieces.
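
Steps (1) and (3) of the trio can be sketched in a few lines of Python. The code aggregates a week of exported stats by task type, computes each type's effective hourly rate, and flags anything under your floor. The task types, figures, and $8.00 floor below are hypothetical.

```python
# Sketch of steps (1) and (3): per-task-type hourly rates from a week of stats,
# flagging types below a personal floor. All data here is hypothetical.
from collections import defaultdict

weekly_stats = [
    # (task_type, minutes_spent, approved_earnings_usd)
    ("image_labeling", 90, 13.50),
    ("surveys", 60, 4.20),
    ("transcription", 45, 7.65),
    ("surveys", 30, 1.80),
]

MIN_HOURLY = 8.00  # hypothetical personal minimum


def hourly_by_type(rows):
    """Sum minutes and earnings per task type, then convert to $/hour."""
    minutes = defaultdict(float)
    earned = defaultdict(float)
    for task_type, mins, usd in rows:
        minutes[task_type] += mins
        earned[task_type] += usd
    return {t: earned[t] / (minutes[t] / 60) for t in minutes if minutes[t] > 0}


rates = hourly_by_type(weekly_stats)
to_prune = sorted(t for t, r in rates.items() if r < MIN_HOURLY)

for task_type, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{task_type:15} ${rate:6.2f}/h")
print("Prune:", to_prune)
```

Here surveys come out at $4.00/h across 90 minutes, so they are the candidates to prune. Transcription ($10.20/h) and image labeling ($9.00/h) clear the floor.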

What pays and what pretends: AI tuning, data labeling, and tiny tasks that add up

Micro-tasks are a mixed bag: some are tiny, honest gigs that reward speed and attention, and others are clever-looking traps that pay pennies for minutes. The ones that truly "pay" tend to have clear instructions, reproducible tasks, and requesters who value consistent outputs — think image bounding boxes with example frames, or sentiment labels with a rubric and quick feedback. The ones that "pretend" often give vague surveys, hide rejection rules, or require unpaid screening rounds. If you treat this like a tiny business instead of a scavenger hunt, you start to see patterns and can favor gigs where time turns into real earnings.

Here are three quick markers to separate decent work from time-sinks:

- 🤖 Quality: Good tasks include examples, edge-case rules, and instant feedback or a test hit; that means fewer rejections and more chances at accuracy bonuses.

- 🐢 Pace: Tasks that can be batched or automated with shortcuts let you convert minutes into higher hourly rates; single-click surveys rarely scale.

- 🚀 Payout: Look for explicit time estimates, transparent acceptance rates, and bonus opportunities — those numbers help you figure real hourly value.

When the work is AI tuning or data labeling, you're often not just clicking; you're training models. That brings both opportunity and risk: tasks like prompt-rating, transcription cleanup, and nuanced labeling can pay better because they demand judgment, but they also invite picky rejections. Practical moves: run a test batch to measure real time per hit, read the guidelines twice (and save example images or notes), and track requester patterns so you can avoid tasks with unpredictable QC. Join the platform's worker forums to catch shady gigs fast and to learn which requesters actually tip or offer rework. Finally, diversify: combine steady, low-variance labeling jobs with higher-paying tuning tasks so the pennies stop feeling random and start feeling like a deliberate portfolio. If you optimize for quality, pace, and clear payouts, those tiny tasks can add up to something worth your time, and maybe even fund your next splurge.

The earnings equation: seconds per click, acceptance rates, and ruthless dollars per minute

Dollars per minute = (pay per task ÷ seconds per task) × 60 × acceptance rate — and that compact formula explains why so many micro‑taskers end the day with pennies. The math is merciless but simple: shave seconds or protect your approvals and your effective pay climbs; lose approvals or waste time and the prettiest price per task means nothing. For example, a 2‑second click at $0.02 with a 90% acceptance rate nets about $0.54 per minute, while a 5‑second, $0.01 task at 80% acceptance falls under $0.10/min. Tiny changes in seconds or acceptance multiply across an hour, and that's the secret to turning clicks into actual cash.
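
The formula translates directly into code. This Python sketch reproduces the two worked examples so you can swap in your own numbers. The function name is hypothetical.

```python
# Dollars per minute = (pay per task / seconds per task) * 60 * acceptance rate.
def dollars_per_minute(pay_per_task: float, seconds_per_task: float,
                       acceptance_rate: float) -> float:
    """Expected earnings per minute of back-to-back work on one task type."""
    return (pay_per_task / seconds_per_task) * 60 * acceptance_rate


fast = dollars_per_minute(0.02, 2, 0.90)  # about $0.54/min
slow = dollars_per_minute(0.01, 5, 0.80)  # about $0.096/min
print(f"${fast:.3f}/min vs ${slow:.3f}/min")
print(f"Hourly: ${fast * 60:.2f} vs ${slow * 60:.2f}")
```

Multiplying by 60 gives an hourly figure: $32.40 versus $5.76 here. That figure holds only if tasks run back to back. Time spent searching for work or reading instructions lowers it further.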

Acceptance rates are more than a badge — they determine whether the hours you log translate into income. Rejections cost you time, future access, and negotiating power with requesters; they also force you to factor a 'waste' multiplier into every offer you consider. Platforms learn patterns too: if your completion times creep up, the system may route lower‑pay batches your way. So always translate advertised pay into an effective hourly figure using a realistic seconds‑per‑task and your acceptance estimate. If you don't, you're budgeting on fiction instead of dollars.

Practical tweaks beat wishful thinking. Reduce seconds per click by creating clipboard templates, learning keyboard shortcuts, and batching identical items so you don't reset your mental context between tasks. Pre‑scan instructions before you accept to avoid rejections, favor requesters with good approval histories, and keep a tiny timer and log to compute real DPM for the task types you do most. Small rules, such as only taking batches above a target DPM at your expected acceptance rate, let you filter out the penny traps. Remember: a slightly lower rate backed by 99% acceptance is usually worth more than a flashy advertised rate that evaporates with one mistake.
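
The target-DPM rule can be expressed as a simple filter over offered batches. In this Python sketch, the batch names, the timing and acceptance estimates, and the $0.15/min target are hypothetical. In practice the estimates should come from your own timer and log.

```python
# Keep only offered batches whose expected dollars per minute clears a target.
# Batch names, estimates, and the target are hypothetical examples.
from typing import NamedTuple


class Batch(NamedTuple):
    name: str
    pay_per_task: float    # advertised pay in dollars
    est_seconds: float     # your measured seconds per task
    est_acceptance: float  # your expected acceptance rate, 0..1


def expected_dpm(b: Batch) -> float:
    return (b.pay_per_task / b.est_seconds) * 60 * b.est_acceptance


def worth_taking(batches, target_dpm):
    return [b.name for b in batches if expected_dpm(b) >= target_dpm]


offers = [
    Batch("sentiment_rubric", 0.03, 8, 0.99),
    Batch("vague_survey", 0.25, 90, 0.70),
    Batch("bbox_frames", 0.05, 15, 0.95),
]
print(worth_taking(offers, target_dpm=0.15))
```

The $0.25 survey has the highest advertised pay. At 90 seconds and 70% expected acceptance, though, it works out to about $0.117/min and is filtered out. The two cheaper, faster batches pass.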

Want a fast win? Plug your own seconds-per-task and acceptance estimates into the formula above to see how seconds and rejections change your hourly picture, and check requesters' approval histories before you start. Optimize the seconds, protect the acceptances, and you'll stop being surprised by pennies and start recognizing real, repeatable earnings.

Platform secrets unmasked: queues, quality scores, geo pricing, and why your feed looks the way it does

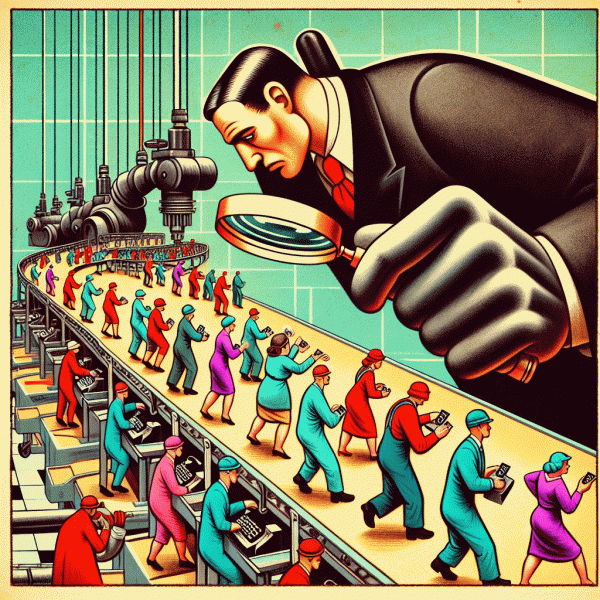

Think of micro-task platforms as theatrical stage managers rather than neutral bulletin boards. Behind the bright task tiles is a choreography of queues, buyer rules, and algorithmic experiments that decide which workers get which roles. Requesters slice labor into private batches, split tests, and fallback pools; platforms then throttle supply to control fraud and quality while protecting requester budgets. The visible result is a patchwork feed where some workers are handed the juicy, high-paying pieces and many others see the skimmed pennies. That fragmentation is intentional: it rewards predictable outcomes for buyers and builds a hierarchy of access that only looks chaotic from the audience.

Quality scores are the secret gradebook that moves people through that hierarchy. These scores combine correctness, completion speed, rejection history, and sampled audits into a hidden number that platforms use to route tasks. Newcomers sit at the back until they demonstrate repeatable accuracy; midlevel workers lose premium access after a few flagged answers. Practical moves matter: specialize in a narrow task type so your error pattern becomes consistent, accept small vetted batches early to accumulate clean history, and treat every audit as the next gatekeeper. Keep rejection rates negligibly low and aim for near-perfect spot checks to graduate into better queues.

Underneath the UI there are three levers you can learn to read and influence:

- 🐢 Queue Priority: Platforms rank workers and tasks into tiers; accept low-reward starter batches and log acceptance times to discover high-turnover windows that feed better jobs.

- 🤖 Quality Score: Accuracy, speed, and reliability form a hidden composite; focus on flawless micro-hits, use checklists to avoid careless mistakes, and treat corrections as signals, not failures.

- 👥 Geo Pricing: Requesters often set regional pay bands; instead of risky workarounds, learn which time zones have higher demand, partner with peers in higher-paying locales, or rotate schedules to catch premium windows.

If you are tired of chasing pennies, act like a market analyst and not a treasure hunter. Track task earnings by requester and time, keep a simple spreadsheet for task type ROI, and automate repetitive typing inside platform rules so accuracy does not suffer. Batch similar tasks to reduce cognitive switching costs, prioritize jobs that produce clean audit samples, and diversify across platforms so a single algorithmic penalty does not erase your income. Join community hubs to spot requester reputations early, and never sacrifice compliance for a quick bonus. These are not overnight hacks; they are practical steps to transform a scattershot feed into a predictable, slowly climbing income stream.
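
Tracking earnings by requester and time is mostly a grouping exercise. This Python sketch assumes a log of (timestamp, requester, minutes, approved dollars) rows. The requester names and values are invented for illustration.

```python
# Group a task log by requester and by hour of day (all data is made up).
from collections import defaultdict
from datetime import datetime

# (finished_at, requester, minutes_spent, approved_usd)
log = [
    ("2024-05-06T22:15", "RequesterA", 12, 1.80),
    ("2024-05-06T23:40", "RequesterB", 20, 1.00),
    ("2024-05-07T07:05", "RequesterA", 15, 1.50),
    ("2024-05-07T22:30", "RequesterA", 10, 1.50),
]


def dollars_per_hour(rows, key):
    """Effective $/hour for each group produced by key(timestamp, requester)."""
    minutes, earned = defaultdict(float), defaultdict(float)
    for ts, requester, mins, usd in rows:
        group = key(datetime.fromisoformat(ts), requester)
        minutes[group] += mins
        earned[group] += usd
    return {g: earned[g] / (minutes[g] / 60) for g in minutes if minutes[g] > 0}


by_requester = dollars_per_hour(log, lambda t, r: r)
by_hour = dollars_per_hour(log, lambda t, r: t.hour)

for name, rate in sorted(by_requester.items()):
    print(f"{name}: ${rate:.2f}/h")
for hour, rate in sorted(by_hour.items()):
    print(f"{hour:02d}:00  ${rate:.2f}/h")
```

In this toy log the 22:00 hour earns the most per hour worked. With weeks of real data, the same grouping shows which requesters and time windows deserve your attention.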

Level up playbook: workflows, browser boosters, and habits that turn cents into real wins

Small payouts can feel like noise until someone shows you how to tune the radio. Treat micro-tasks like a production line, not a side-hustle hobby: design a repeatable process, reduce friction, and measure outcomes. Start by mapping the five most common task shapes you see on your dashboards and assign a reliable cadence to each. That map becomes your playbook. When every click has purpose, those pennies stop leaking away and start dropping predictably into the same bucket.

Workflows win when they are simple and repeatable. Build a one-screen checklist for each task type: open app, select template, apply quick filter, validate, submit. Use a short timer to create urgency and prevent perfection paralysis. Save common text snippets in a clipboard manager and assign hotkeys to the three most frequent responses. Replace random hunting with batch sessions: do identical tasks for 25 minutes, take a 5-minute break, then switch task shape. That rhythm increases speed and reduces errors, which means more accepted tasks and fewer wasted minutes.

Browser boosters change the game. Add an autofill extension, a tab manager to group task flows, and a lightweight screenshot tool for fast evidence capture. Use one browser profile for earning and another for everything else to keep cookies and suggestion clutter out of your work sessions. Track conversions in a simple spreadsheet with four columns: tasks started, tasks completed, net payout, and time spent. Those numbers reveal your true hourly rate. If you want options to test, compare platforms that offer instant payout and look for gigs that match your boosted workflow.
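
Python's standard `csv` module can summarize a four-column conversion sheet. The column header names and sample rows here are assumptions. Adapt them to however you lay out your own spreadsheet.

```python
# Summarize a conversion sheet: one row per session with the four columns
# named in the text. Header names and sample rows are assumptions.
import csv
import io

SHEET = """tasks_started,tasks_completed,net_payout,minutes_spent
30,27,3.24,25
18,18,2.70,25
22,15,1.35,25
"""


def summarize(text):
    """Return the completion rate (completed / started) and the true hourly rate."""
    started = completed = 0
    payout = minutes = 0.0
    for row in csv.DictReader(io.StringIO(text)):
        started += int(row["tasks_started"])
        completed += int(row["tasks_completed"])
        payout += float(row["net_payout"])
        minutes += float(row["minutes_spent"])
    return {
        "completion_rate": completed / started if started else 0.0,
        "hourly_rate": payout / (minutes / 60) if minutes else 0.0,
    }


stats = summarize(SHEET)
print(f"Completion: {stats['completion_rate']:.0%}, hourly: ${stats['hourly_rate']:.2f}")
# prints: Completion: 86%, hourly: $5.83
```

To read your real sheet, export it as CSV and pass the file's text to `summarize` instead of the inline sample.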

Habits matter as much as hacks. Commit to a two-week experiment where you only accept tasks that fit your map, and measure every session. Reinvest a small portion of rare wins into productivity tools, or into a better microphone if surveys reward voice clips. Celebrate micro-milestones to avoid burnout: hit a streak of accepted tasks and treat yourself to one small reward. Over time the process compounds: faster handling, fewer rejections, and smarter platform choices convert pennies into moments where you actually notice growth.