The Truth About Pay-to-Play: When Boosts Beat Organic

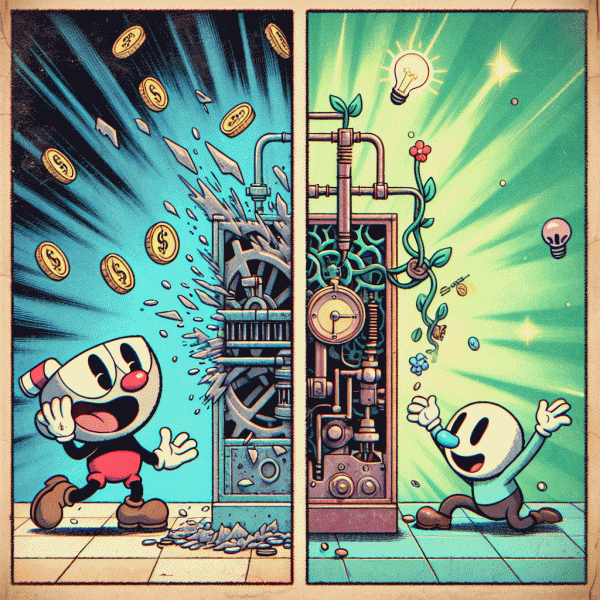

Paid boosts are not a moral failure or a shortcut to be ashamed of; they are a tool. Use them like a scalpel rather than a sledgehammer and they will cut through noise, not blow a hole in your budget. The core truth is simple: paid wins when speed, control, and clear measurement matter more than slow organic signal building. If you have a launch date, a time-limited offer, a thin organic audience, or a hypothesis about creative that needs fast validation, paid can deliver the sample sizes and reach you need to decide with confidence.

Here are three pragmatic moments when paying to play tends to outpace pure organic effort:

- 🚀 Scale: Rapidly reach a sufficiently large and relevant audience to hit learning thresholds and statistical significance.

- ⚙️ Test: Execute systematic creative and audience experiments at controlled spend levels to find winners without burning a native feed with noise.

- 🔥 Signal: Seed the algorithm with engagement and conversions so platform models can learn faster and surface your content more naturally later.

Turning those moments into wins requires guardrails. Set clear CPA or ROAS targets before you start, then run short, high-cadence experiments to identify top performers. Include control or holdout groups to measure incrementality and avoid mistaking extra impressions for real lift. Use frequency caps and audience exclusions to limit overlap and creative fatigue, and pace budget ramps so cost per action does not spike. When a creative wins, amplify; when it tanks, kill it fast and reassign budget. Track downstream metrics like retention or LTV, not just first touch, so paid activity feeds a sustainable growth model.
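
To make the holdout idea concrete, here is a minimal Python sketch of an incrementality check. The function names and the example figures are illustrative assumptions, not numbers from any real campaign, and the sketch ignores statistical significance, which a real test would also need to check.

```python
def incremental_lift(exposed_conversions, exposed_users,
                     holdout_conversions, holdout_users):
    """Compare conversion rates of users who saw ads vs. a holdout group.

    Returns (absolute_lift, relative_lift). relative_lift is None when the
    holdout converted nobody, because the ratio is undefined.
    """
    if exposed_users <= 0 or holdout_users <= 0:
        raise ValueError("both groups need at least one user")
    exposed_rate = exposed_conversions / exposed_users
    holdout_rate = holdout_conversions / holdout_users
    absolute = exposed_rate - holdout_rate
    relative = absolute / holdout_rate if holdout_rate > 0 else None
    return absolute, relative


def incremental_cpa(spend, exposed_conversions, exposed_users,
                    holdout_conversions, holdout_users):
    """Cost per conversion that would NOT have happened without the ads."""
    absolute, _ = incremental_lift(exposed_conversions, exposed_users,
                                   holdout_conversions, holdout_users)
    incremental_conversions = absolute * exposed_users
    if incremental_conversions <= 0:
        return None  # no measurable lift, so incremental CPA is undefined
    return spend / incremental_conversions


# Hypothetical example: 300 of 10,000 exposed users convert (3%),
# 40 of 2,000 holdout users convert (2%), and spend was 5,000.
absolute, relative = incremental_lift(300, 10_000, 40, 2_000)
naive_cpa = 5_000 / 300
true_cpa = incremental_cpa(5_000, 300, 10_000, 40, 2_000)
print(f"lift: {absolute:.3f} absolute, {relative:.0%} relative")
print(f"naive CPA: {naive_cpa:.2f}, incremental CPA: {true_cpa:.2f}")
```

In this made-up case the naive CPA looks roughly three times better than the incremental one, which is exactly the gap a holdout is meant to expose.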

Finally, think hybrid. Use paid as a seeder and organic as the amplifier: surface winners quickly with ads, then repurpose those assets across owned channels and community programs to lower future acquisition cost. Plan a 30 to 90 day playbook that defines entry criteria, scale triggers, and exit rules, and treat paid as an iterative learning engine rather than a permanent prosthetic. Do this and paid becomes less of a money pit and more of a growth accelerator.

Budget Busters: How Much to Spend Before Diminishing Returns

Think of ad spend like pouring water into a funnel. At first the funnel runs dry and every dollar makes a noticeable splash: clicks, signups, sales. But after a point each extra dollar splashes in the same puddle and the lift becomes negligible. The trick is to find that inflection point where marginal gains drop below what the business needs to justify additional spend. Treat spend as an experiment with clear measurements: incremental CPA, marginal ROAS, conversion rate by cohort, and lifetime value of the customers you actually acquire from that channel. Run small step tests instead of a single big leap and measure returns incrementally every seven to fourteen days.
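
One way to locate the inflection point is to compute marginal ROAS between spend tiers rather than blended ROAS over the total. The sketch below assumes you log cumulative spend and cumulative attributed revenue at each step; the function names, tier values, and target are hypothetical.

```python
def marginal_roas(tiers):
    """Return [(cumulative_spend, marginal_roas), ...] for each spend step.

    `tiers` is a list of (cumulative_spend, cumulative_revenue) pairs,
    sorted by spend. Marginal ROAS is extra revenue per extra dollar
    relative to the previous tier.
    """
    results = []
    prev_spend, prev_revenue = 0.0, 0.0
    for spend, revenue in tiers:
        delta_spend = spend - prev_spend
        if delta_spend <= 0:
            raise ValueError("tiers must have strictly increasing spend")
        results.append((spend, (revenue - prev_revenue) / delta_spend))
        prev_spend, prev_revenue = spend, revenue
    return results


def first_tier_below(tiers, target_roas):
    """Spend level of the first step whose marginal ROAS misses the target."""
    for spend, roas in marginal_roas(tiers):
        if roas < target_roas:
            return spend
    return None  # every step cleared the target


# Hypothetical step test: blended ROAS still looks healthy at 4,000
# (9,000 / 4,000 = 2.25), but the last 1,000 only returned 500.
observed = [(1000, 4000), (2000, 7000), (3000, 8500), (4000, 9000)]
for spend, roas in marginal_roas(observed):
    print(f"up to {spend}: marginal ROAS {roas:.2f}")
print("first step below a 2.0 target:", first_tier_below(observed, 2.0))
```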

Start pilots with strict stop rules. For a new channel, cap the pilot at a fixed amount relative to your monthly marketing budget, for example 5 to 15 percent, or a flat band such as 1k to 5k depending on company size. Ramp spend in steps: increase budget by 20 to 40 percent only after performance stabilizes for one measurement window. Track the exact cohort you are funding so that attribution tells a truthful story instead of mixing signal with noise. If a channel cannot beat your target CPA or marginal ROAS after two consecutive ramps, pause and review creative, landing page, and audience before spending more. For quick traffic validation you can also post a task for website visits to stress-test conversion assumptions before committing larger budgets.

Here are three quick heuristics for when to scale or stop:

- 🚀 Scale: If marginal ROAS is higher than your target and volume is not constrained, increase in 25 to 50 percent increments and monitor for conversion decay.

- 🐢 Plateau: If cost per acquisition rises while conversion rate slips, hold spend and run creative or funnel experiments rather than pouring in more budget.

- 💥 Stop: If marginal ROAS falls below break even or if customer quality declines, cut spend and reassign to higher-performing channels.
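
The three heuristics above can be written as a small rule function so the decision is made the same way every review. This is a sketch under assumptions: the parameter names are invented for illustration, the check order (stop, then plateau, then scale) is one reasonable choice, and the "hold" outcome is added here to cover cases the heuristics do not mention.

```python
def spend_decision(marginal_roas, target_roas, break_even_roas,
                   cpa_rising=False, conversion_rate_falling=False,
                   customer_quality_declining=False,
                   volume_constrained=False):
    """Map current channel metrics to 'stop', 'plateau', 'scale', or 'hold'."""
    # Stop: below break even, or the customers acquired are getting worse.
    if marginal_roas < break_even_roas or customer_quality_declining:
        return "stop"
    # Plateau: CPA rising while conversion rate slips.
    if cpa_rising and conversion_rate_falling:
        return "plateau"
    # Scale: beating target with room to grow.
    if marginal_roas > target_roas and not volume_constrained:
        return "scale"
    # Assumption: anything else keeps the current budget unchanged.
    return "hold"


def next_budget(current_budget, step=0.25):
    """Raise budget by one increment; the text suggests 25 to 50 percent."""
    if not 0.25 <= step <= 0.50:
        raise ValueError("step should stay between 0.25 and 0.50")
    return current_budget * (1 + step)


# Hypothetical weekly review for one channel.
decision = spend_decision(marginal_roas=3.2, target_roas=2.5, break_even_roas=1.0)
if decision == "scale":
    print("new budget:", next_budget(1000, step=0.25))
```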

Finally, turn data into a budget map. Build a simple dashboard that logs spend tiers, marginal ROAS, CPA, and post-acquisition behavior for each tier. Use that map to set three buckets: test, scale, and sustain. The test bucket lets you discover working combinations; the scale bucket is for channels and creatives that clear your profitability bar; the sustain bucket funds proven winners while you work on retention and LTV uplift. Always be ready to reallocate: a channel that was a goldmine last quarter can become a money pit today if creative or market conditions change. Keep decisions time boxed and rule driven, and growth will come from disciplined experiments rather than hope.

Targeting Tricks That Turn Boosts Into Bookings

Think of boosts as loudspeakers, not magic wands. A boost that reaches everyone annoys most and converts few. The smart play is to aim the speaker at people who already hum the tune you want them to love. Start by slicing audiences into tiny, testable cohorts based on recent behaviors and intent signals: recent page visits, cart nudges, quick-return users, and high-engagement content readers. Treat each cohort like a mini-campaign with its own creative and offer. That reduces waste, improves relevance, and makes the numbers behind each tactic much less fuzzy when you measure results.

Next, craft messaging that matches stage and motive. Do not use the same call to action for someone who visited once a month ago and someone who added to cart yesterday. Use urgency and incentives sparingly for high-intent segments, and educational, low-pressure content for the earlier-stage folks. Pair targeting with frequency caps and timing windows so you avoid spamming warm prospects. Run micro A/B tests that change only one variable at a time: audience definition, creative, or landing page. That will tell you what actually moves bookings rather than what merely feels good.

Seed lookalikes from your best converters and keep refining those seeds by LTV cohorts. If you need a place to practice or find fresh audience ideas, test a task marketplace to observe task-level intent and reward structures, then scale winners into paid channels. Use exclusion lists aggressively: exclude recent converters, current customers on a membership path, and internal funnels that have their own communications. Attribution windows matter: track short-term booking signals alongside early-stage lead signals so you know which audience yielded bookings and which only yielded clicks.

Budget and bid like a value investor. Allocate more to segments with consistent CPL and rising conversion rates, and pull back from segments that bleed impressions with no lift in bookings. Implement bid multipliers for time of day, device, and top-performing geos. Combine that with dynamic creative optimization so the right creative variant finds the audience most receptive to it. Also keep a small, persistent holdout to guard against seasonality illusions; a steady control group will tell you whether boosts are generating incremental bookings or just harvesting demand that would have converted anyway.

Wrap this into a short operating rhythm. Each week, score cohorts by conversion rate, CPL, and early-LTV signals; each month, promote the top 2 cohorts and retire the bottom 2. Keep a rolling experiment log with hypotheses, sample sizes, and winners. When a segment proves reliable, scale slowly with strict guardrails. That way boosts stop being a money pit and become a predictable lever you can pull to turn attention into actual bookings.
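
As a rough illustration of that rhythm, the Python sketch below scores cohorts and picks the top and bottom two. The composite score (conversion rate × early LTV ÷ CPL, roughly early value per lead dollar) is an assumption made for this example; the text does not prescribe a formula, and the cohort names and numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    name: str
    conversion_rate: float  # leads that become bookings, 0..1
    cpl: float              # cost per lead
    early_ltv: float        # early lifetime-value signal per booking

    def score(self):
        # Assumed composite: expected early value per dollar spent on leads.
        return self.conversion_rate * self.early_ltv / self.cpl


def monthly_review(cohorts, n=2):
    """Return (promote, retire): names of the top n and bottom n cohorts."""
    if len(cohorts) < 2 * n:
        raise ValueError("need at least 2*n cohorts to avoid overlap")
    ranked = sorted(cohorts, key=lambda c: c.score(), reverse=True)
    promote = [c.name for c in ranked[:n]]
    retire = [c.name for c in ranked[-n:]]
    return promote, retire


# Hypothetical cohorts for one month.
cohorts = [
    Cohort("cart_abandoners", 0.20, 10.0, 100.0),
    Cohort("recent_visitors", 0.10, 8.0, 80.0),
    Cohort("engaged_readers", 0.05, 5.0, 60.0),
    Cohort("lookalike_1pct", 0.04, 12.0, 90.0),
    Cohort("broad_interest", 0.01, 6.0, 50.0),
]
promote, retire = monthly_review(cohorts)
print("promote:", promote)
print("retire:", retire)
```

A single composite score is easy to log and compare week over week, but it can hide trade-offs, so it is worth keeping the three underlying metrics visible in the experiment log as well.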

Creative That Clicks: 3 Thumb-Stopping Formats to Test

Attention is the new currency, and creative is where you either earn interest or burn the account balance. Stop hoping the algorithm will rescue a boring asset: in 2026 the difference between profitable boosting and a money pit is whether your ad makes someone stop scrolling. Start with clarity—what single idea do you want to land in three seconds?—then pick a format that magnifies that idea. The point isn't to obsess over production polish; it's to create clear, testable assets that expose which storytelling mechanics actually move metrics for your audience.

Format 1 — Vertical micro-video (6–15s): Keep the first two seconds sacred. Jump into an emotion (surprise, curiosity, relief) and use quick visual beats instead of explanation. Use captions, hard cuts, and a single bold visual prop so viewers process the concept instantly even with sound off. Produce a silent-native version and a sound-on variant with a short, catchy audio logo. Metric checklist: view-through rate (VTR) to validate attention, CTR for relevance, and CPM to judge efficiency. Quick production tip: shoot with a phone, stabilize, and export a 9:16 master, then cut it into 6–8s clips for rapid iterations.

Format 2 — Swipe-story carousel: Treat each card like a mini headline that leads to the next—don't cram a full product page into one frame. A simple narrative arc works best: Problem → Quick Demo → Social Proof → CTA. Add a bold text overlay on every card so the message survives mute and scan; include a strong visual anchor (the product, a face, a distinctive motion) that repeats across cards. Carousels are perfect for sequential messaging experiments—test different ordering, card counts, and CTA phrasing to see which path maximizes incremental clicks and lowers CPAs.

Format 3 — Raw UGC + creator-native edits: Authenticity scales when you give creators a "frame" rather than a script. Share 3 creative prompts (hook idea, one product benefit, a compelling ending) and let creators use their voice. Then, repurpose those assets into polished ads by adding subtitles, a fast intro cut, and a 2–3 second branded sting. This hybrid approach retains believability while enabling brand-safe performance testing. Track conversion lift and post-click engagement to separate charm from actual purchase intent—UGC that gets likes but not clicks is just entertainment, not an ad.

How to test without losing your shirt: run a lean factorial grid of 3 formats × 3 audiences × 2 CTAs = 18 cells, keep budgets low per cell for 48–72 hours, then double down on the top 2 performers. In follow-up rounds on those winners, change only one variable at a time and measure against clear stop/scale rules (e.g., stop if CPI/CPA is 50% worse than baseline after the learning window; scale if it's 20% better). Refresh creative every 2–3 weeks to beat ad fatigue and log performance context (season, placement, audience) so you learn patterns, not flukes. Small, fast tests beat big, slow bets, especially when your creative is the lever that decides whether boosting becomes growth hacking or a money pit.
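
The grid and the stop/scale rule can be sketched in a few lines of Python. The format, audience, and CTA labels are placeholders, and splitting the budget evenly across cells is an assumption; the 50% and 20% thresholds come from the rule above, read as CPA at least 1.5× baseline and at most 0.8× baseline.

```python
from itertools import product

# Placeholder labels; swap in your own formats, audiences, and CTAs.
FORMATS = ["micro_video", "carousel", "ugc"]
AUDIENCES = ["audience_a", "audience_b", "audience_c"]
CTAS = ["cta_1", "cta_2"]


def build_cells(total_budget):
    """Enumerate every format x audience x CTA cell with an even budget split."""
    combos = list(product(FORMATS, AUDIENCES, CTAS))
    per_cell = total_budget / len(combos)
    return [
        {"format": f, "audience": a, "cta": c, "budget": per_cell}
        for f, a, c in combos
    ]


def verdict(cell_cpa, baseline_cpa):
    """Apply the stop/scale thresholds after the learning window."""
    if cell_cpa >= baseline_cpa * 1.5:   # 50% worse than baseline
        return "stop"
    if cell_cpa <= baseline_cpa * 0.8:   # 20% better than baseline
        return "scale"
    return "keep testing"


cells = build_cells(total_budget=1800)
print(len(cells), "cells at", cells[0]["budget"], "each")
print(verdict(cell_cpa=32, baseline_cpa=20))  # hypothetical weak cell
print(verdict(cell_cpa=15, baseline_cpa=20))  # hypothetical strong cell
```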

Smarter Than the Button: When to Switch to Full Ads Manager

Think of the boost button as a power nap for a single post: quick energy, low commitment, and sometimes all you need. But when you start craving sustained growth, precise targeting, or predictable cost per action, the one-click sprint becomes a treadmill. Watch for clear signals that you need the full Ads Manager toolkit: your reach is stuck, cost per engagement is creeping up, frequency is climbing, and creative fatigue is kicking in. Those are not bugs; they are features telling you that scale and control are next on the to-do list.

Concrete triggers make the choice simple. If you are getting fewer than the minimum conversions needed for reliable optimization on your objective, it is time to change tactics; aim for consistent conversion volumes of 40 to 100 per week per campaign so the delivery algorithm has data to learn. If cost per acquisition moves more than 20 percent above target, or if frequency gets over 3 to 4 and CTR drops, the boost route is wasting money. Also switch when you need multi-creative testing, split tests across audiences, or separate budgets by funnel stage instead of lumping everything into one promoted post.
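
Those triggers are simple enough to encode as a checklist. The sketch below is illustrative only: the function and parameter names are made up, and the defaults use the lower end of each range in the paragraph above (40 conversions per week, frequency above 3).

```python
def switch_triggers(weekly_conversions, cpa, target_cpa, frequency,
                    ctr_dropping, min_weekly_conversions=40,
                    cpa_tolerance=0.20, max_frequency=3.0):
    """Return the list of reasons to move from boosting to Ads Manager.

    An empty list means none of the numeric triggers has fired.
    """
    reasons = []
    if weekly_conversions < min_weekly_conversions:
        reasons.append("conversion volume too low for reliable optimization")
    if cpa > target_cpa * (1 + cpa_tolerance):
        reasons.append("CPA more than 20 percent above target")
    if frequency > max_frequency and ctr_dropping:
        reasons.append("high frequency with falling CTR")
    return reasons


# Hypothetical boosted post after two weeks.
for reason in switch_triggers(weekly_conversions=25, cpa=30.0, target_cpa=20.0,
                              frequency=3.5, ctr_dropping=True):
    print("switch because:", reason)
```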

When you flip the switch, start by translating the boosted post into a proper campaign objective that matches business outcomes: conversions, catalog sales, app installs, or store visits. Create ad sets for clear audience buckets rather than a single blob. Use Campaign Budget Optimization for broad scale or set manual budgets when you want tighter control during tests. Run at least three creative variations and consider dynamic creative so the algorithm can recombine headlines, images, and CTAs. Set proper tracking, tag creatives with UTM parameters, and, if relevant, implement Conversions API to keep data flowing reliably.
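
For the UTM step, a small helper keeps tags consistent across creatives. This sketch uses Python's standard `urllib.parse`; the helper name, the example URL, and the tag values are hypothetical, while `utm_source`, `utm_medium`, `utm_campaign`, and `utm_content` are the conventional UTM parameter names.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def add_utm(url, source, medium, campaign, content):
    """Append UTM parameters to a landing URL, keeping existing query params."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": content,  # use this to tell creatives apart
    })
    return urlunparse(parts._replace(query=urlencode(query)))


# Hypothetical landing page and creative label.
tagged = add_utm("https://example.com/offer?ref=1",
                 source="facebook", medium="paid_social",
                 campaign="spring_launch", content="video_a")
print(tagged)
```

Giving each creative variation its own `utm_content` value makes it possible to compare variants in analytics even when the ad platform's own reporting is incomplete.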

Audience hygiene and structure will pay dividends fast. Build lookalikes from high-value actions, keep exclusion lists for recent converters, and seed lookalikes with enough users to avoid overfitting. Start with a small number of focused ad sets, each funded well enough to exit the learning phase quickly, then broaden and consolidate once metrics stabilize. If bidding becomes chaotic, test manual bid caps or target cost to regain predictability. Make creative refresh a scheduled habit: swap visuals or angles every 10 to 14 days when frequency climbs.

Finally, treat the Ads Manager as a lab, not a magic wand. Set guardrails with automated rules to pause sky-high CPAs, run periodic lift tests to validate incremental value, and monitor ROAS alongside leading indicators like CTR and purchase rate. If three rounds of optimization do not move the needle, iterate on proposition and landing experience rather than pouring more budget into the same setup. Moving off the boost button is not an admission of failure; it is a move to smarter spending and sustainable growth.